Resolving customer problems at scale is hard, and a chat feature is often the most feasible solution. Making those chat experiences unified and cohesive, however, is a challenge in itself.

DoorDash customers often want to talk to a customer support representative immediately. One of the mechanisms we offer to facilitate this communication is text-based chat. In a previous blog post, we outlined how we integrated chat into the DoorDash app. Building on this foundation, we have extended chat to include both live and automated support.

The general problems with our existing chat solution

Our legacy chat was fragmented across our different types of users (consumers, Dashers–our name for delivery drivers–and merchants), using multiple applications running on the different platforms (iOS, Android, Web). This division of customer applications also included support apps, which created a lot of duplication of effort and made it harder to adopt best practices in a centralized way.

One of the biggest issues with our legacy chat solution was its inability to pass along user data to a support agent. A user would be asked to fill out a form, then would be put into a queue to connect with an agent. The agent would then end up asking for the same information, even though the user had already filled out the form. We needed a way to pass along this context to the agent.

We also wanted to automate a lot of the support process so customers would not necessarily even need to talk to a support agent but could immediately get the information they needed through the app.

Building a better chat solution

We wanted to build one chat support platform for all of our customers in a way that would allow heavy reuse across platforms and ensure a consistent user experience.

Creating a better chat application involved building:

- Common UI components for all chat implementations at DoorDash

- An extensible backend system that allows for integrating with different third-party services

- A system to allow for high scalability and resilience

Building common UI components

We wanted to create a single chat implementation that would work with support as well as the Consumer to Dasher chat that is offered for each order. Recently, we upgraded our Consumer to Dasher chat using Sendbird. Before that change, when a Dasher wanted to reach a consumer they would send a message that the consumer would receive as an SMS message. This messaging was migrated to utilize Sendbird and embedded directly in the Consumer and Dasher app. We built on this work to create a single chat implementation, allowing us to have a consistent experience across all chat at DoorDash. It also allowed both flows to benefit from improvements and functionality made for the other one.

By consolidating the components of our past platform applications into a single library, we created a UI component system that we could then codify into our applications. While it took effort to create a UI for each platform (Web, iOS, Android), once created, the component could be reused across the multiple use cases where chat is displayed on that platform. Whenever additional customization was needed, we could rely on each platform having a single module that can be imported into the separate apps and customized as needed. This allowed us to build out the multiple use cases faster than if we had created each one independently.

Building an extensible backend system

The backend system was built with multiple layers, allowing us to split responsibilities between internal services and third parties. Utilizing third parties for functionality such as chat, natural language processing (NLP), and agent ticket management allowed us to move quicker without having to build functionality that does not directly differentiate us. We kept in-house the pieces that are core to our business logic, such as determining the expected reason for reaching out or determining possible resolutions for the customer. These live in our decision-making engine, which leverages existing work done by other teams. By keeping control of the integration and customer journey, we have visibility into the different chats and their usage and can easily make updates.

To allow the platform to handle processing at common layers, we created a new API gateway for all the different callbacks from third parties. Since the processing does not need to be synchronous, we push the messages to a message queue so that they are processed reliably. The downstream processing is then agnostic of the source and driven by the data in the different events.
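The callback flow described above can be sketched roughly as follows. This is a minimal illustration, not DoorDash's implementation: the `ChatEvent` envelope, the vendor and event names, and the in-process `queue.Queue` (standing in for a durable message queue) are all assumptions made for the example.

```python
import json
import queue
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ChatEvent:
    """Common envelope so downstream code never depends on the vendor format."""
    source: str       # which third party sent the callback (hypothetical names)
    event_type: str   # normalized event name used for dispatch
    payload: dict


# In-process stand-in for a durable message queue (assumption for this sketch).
event_queue: "queue.Queue[ChatEvent]" = queue.Queue()


def handle_callback(source: str, raw_body: str) -> int:
    """Gateway entry point: normalize the callback, enqueue it, acknowledge."""
    body = json.loads(raw_body)
    event = ChatEvent(source=source, event_type=body["type"],
                      payload=body.get("data", {}))
    event_queue.put(event)
    return 202  # accepted; the real work happens asynchronously


# Handlers are keyed by event type only, so they are agnostic of the source.
HANDLERS: Dict[str, Callable[[ChatEvent], str]] = {
    "message.sent": lambda e: f"store message {e.payload.get('text', '')!r}",
    "ticket.closed": lambda e: f"close chat {e.payload.get('chat_id')}",
}


def drain_queue() -> List[str]:
    """Worker loop body: process every queued event by its type."""
    results = []
    while not event_queue.empty():
        event = event_queue.get()
        handler = HANDLERS.get(event.event_type)
        results.append(handler(event) if handler else f"ignored {event.event_type}")
    return results
```

Because the gateway only normalizes and enqueues, a slow or failing handler does not block the third party's callback, and adding a new vendor means adding a normalization step rather than new downstream logic.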

Solving problems through automation

As the improved support chat is now more deeply embedded into the core DoorDash ecosystem, we started to implement the automation mentioned earlier. We can look at the path the user took to open the chat as well as the current state to provide some immediate feedback to the user, which may reduce both their wait time for the information they want and the costs of involving a human agent.

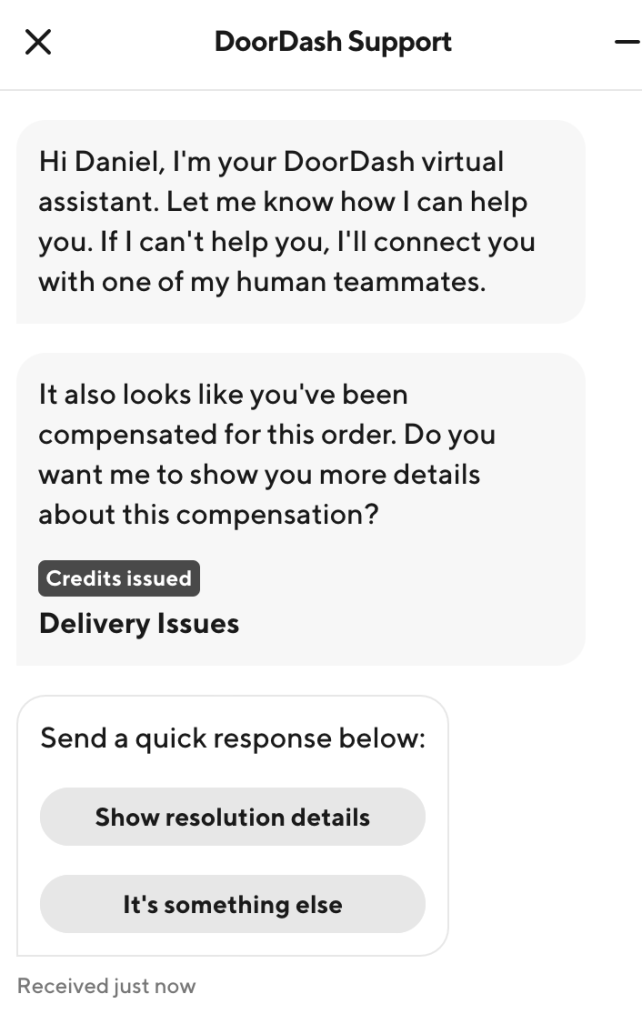

By integrating chat with our Decision Engine platform, we enabled operations to build in any functionality, including providing updates on the status of an order, issuing credits and refunds, and providing custom messaging. This will allow DoorDash to rely more upon automation and less on manual human operators. Of course, users can still escalate at any point in time and get the DoorDash support they expect.
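To make the idea concrete, here is a small sketch of rule-based automation driven by the chat's entry point and the order state. It is not the Decision Engine itself: the `ChatContext` fields, the rule names, the 15-minute threshold, and the action strings are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List

ESCALATE = "escalate_to_agent"


@dataclass(frozen=True)
class ChatContext:
    entry_point: str       # screen the user opened chat from (hypothetical values)
    order_status: str      # e.g. "in_progress" or "delivered"
    minutes_late: int = 0


@dataclass(frozen=True)
class Rule:
    name: str
    matches: Callable[[ChatContext], bool]
    action: str


# Rules are data, so operations could add or reorder them without code changes.
RULES: List[Rule] = [
    Rule("late_order",
         lambda c: c.order_status == "in_progress" and c.minutes_late >= 15,
         "send_delay_update"),
    Rule("order_status",
         lambda c: c.order_status == "in_progress",
         "send_order_status"),
    Rule("missing_item",
         lambda c: c.entry_point == "report_missing_item" and c.order_status == "delivered",
         "offer_refund_options"),
]


def decide(ctx: ChatContext, wants_agent: bool = False) -> str:
    """Return the first matching automated action, or escalate."""
    if wants_agent:          # the user can always ask for a human
        return ESCALATE
    for rule in RULES:
        if rule.matches(ctx):
            return rule.action
    return ESCALATE          # nothing matched: fall back to an agent
```

Rules are evaluated in order, so more specific conditions should come first, and escalation is both the explicit override and the default.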

In addition to allowing users to select from a predefined set of problems, we also let them type in their reason for contacting us. This text feeds into an NLP engine, which can kick off the appropriate workflow or escalate to an agent. It should also allow us to capture signals such as user sentiment and to identify common issues, providing us with data on common follow-up scenarios that we should add.

Quick response pills were also provided, allowing the user to indicate common answers.
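The free-text routing described above might look something like the sketch below. A simple keyword matcher stands in for the third-party NLP engine, and the intent names, keywords, workflow names, and confidence threshold are all assumptions made for the example.

```python
from typing import Dict, Tuple

# Hypothetical intents and keywords; a real NLP engine would replace classify().
INTENT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "missing_item": ("missing", "forgot", "left out"),
    "order_status": ("where", "late", "status"),
}

WORKFLOWS: Dict[str, str] = {
    "missing_item": "start_missing_item_workflow",
    "order_status": "start_order_status_workflow",
}


def classify(text: str) -> Tuple[str, float]:
    """Return (intent, confidence) using naive keyword counting."""
    lowered = text.lower()
    best_intent, best_hits = "unknown", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = sum(1 for keyword in keywords if keyword in lowered)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent, min(1.0, best_hits / 2)


def route(text: str, threshold: float = 0.5) -> str:
    """Start a workflow when the intent is confident enough, else escalate."""
    intent, confidence = classify(text)
    if intent in WORKFLOWS and confidence >= threshold:
        return WORKFLOWS[intent]
    return "escalate_to_agent"
```

For example, `route("Where is my order, it is late")` selects the order status workflow, while a message that matches no known intent is escalated to an agent.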

Rolling out the migration

We wanted to ensure a smooth rollout of the new chat solution, so we started by rolling out minimal functionality to a small subset of users, then added more functionality and more users in subsequent ramps.

The first scenario we started with was the web experience, for the following reasons:

- There had been no chat functionality post-delivery, so we did not have to worry about breaking existing functionality while adding more functionality to the site

- The volume on web is lower than mobile, so there was lower risk

- We could iterate faster since we were not tied to mobile release cycles

Once it was live, we monitored multiple key performance metrics, including:

- Feedback from agents and customers

- The error rate

- A CSAT metric (customer satisfaction)

- How long customer problems took to solve (AHT = average handle time)

- How often customers had to contact us again for the same issue (FCR = first contact resolution)

- How often customers needed to reach out to an agent (MTO = manual tasks per order)
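As a rough illustration of how the metrics in this list could be computed from chat session records, consider the sketch below. The `ChatSession` fields and the exact formulas are assumptions: here FCR is the share of sessions that were not repeat contacts, MTO is manual agent tasks divided by total orders, and CSAT is the share of answered surveys scoring 4 or 5 out of 5.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ChatSession:
    handle_seconds: float             # time spent resolving the issue
    repeat_contact: bool              # customer had already contacted us about it
    manual_tasks: int                 # agent actions the session required
    csat_score: Optional[int] = None  # 1-5 survey answer, None if unanswered


def summarize(sessions: List[ChatSession], total_orders: int) -> Dict[str, float]:
    """Compute AHT, FCR, MTO, and CSAT under the assumptions stated above."""
    if not sessions or total_orders <= 0:
        raise ValueError("need at least one session and one order")
    answered = [s.csat_score for s in sessions if s.csat_score is not None]
    return {
        "aht_seconds": sum(s.handle_seconds for s in sessions) / len(sessions),
        "fcr": sum(1 for s in sessions if not s.repeat_contact) / len(sessions),
        "mto": sum(s.manual_tasks for s in sessions) / total_orders,
        "csat": (sum(1 for score in answered if score >= 4) / len(answered)
                 if answered else float("nan")),
    }
```

Comparing these values between the users on the new chat and those on the legacy flow gives a simple way to judge each ramp step.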

Once we were confident in the experience and our performance metrics were doing well, we quickly ramped automation and additional functionality. These included uploading images, supporting deliveries while they are still ongoing, embedding chat into the consumer (Cx) and Dasher (Dx) mobile apps, and allowing agents to transfer chats.

We utilized feature flags to ramp each feature independently, so we could ramp up slowly or ramp down quickly if any issues were discovered. Metrics were captured by our analytics system, which let us verify behavior at each step.
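A percentage-based ramp of this kind can be sketched as follows. This is a generic illustration rather than DoorDash's feature flag system: the flag names and the `RAMP_PERCENT` table are hypothetical, and a real setup would read percentages from a flag service instead of a module-level dictionary.

```python
import hashlib
from typing import Dict

# Hypothetical flags and ramp percentages (0-100), each controlled independently.
RAMP_PERCENT: Dict[str, int] = {
    "chat_image_upload": 10,
    "chat_agent_transfer": 0,
}


def bucket(flag: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag: str, user_id: str) -> bool:
    """A user is in the ramp when their bucket is below the flag's percentage."""
    return bucket(flag, user_id) < RAMP_PERCENT.get(flag, 0)
```

Hashing the flag name together with the user ID keeps each user's experience stable across requests and makes the ramps for different flags independent. Raising a percentage only adds users, and setting it to 0 turns the feature off for everyone immediately.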

Conclusion

We are already seeing benefits now that the updated support chat has been ramped on the web, is live for Dashers on mobile, and has automation around some of the responses.

- We have reduced escalation rates and improved CSAT.

- We are now able to automate away certain conditions, reducing the number of manual touch points needed per delivery.

- Customers are able to solve problems faster and without manual intervention, while still having the ability to escalate to a human.

- With common platform processing, we have reduced the time needed to ramp additional automation compared with the previous system.

- With the common UI layers, we now provide consistent branding and a consistent look and feel, making chat feel much more embedded in our applications across all the different platforms.