Running thousands of experiments effectively means balancing speed against the controls needed to maintain trust in experimental outputs, and finding that balance is never easy. Traditionally, experimentation velocity tradeoffs focus on minimizing the false positive rate (FPR), which leans heavily towards maintaining the status quo. For example, in Figure 1 we show the Type I (false positive) and Type II (false negative) error rates from typical experiments. In this traditional setup, with a conventional 5% Type I error rate and a 20% Type II error rate, the experimenter makes an explicit assumption that accidentally shipping a false positive carries four times the risk of the opportunity cost of not shipping a completely valid treatment.

While this thinking has generally been the norm in both industry and academia, it creates certain challenges when it comes to product development. Specifically, it creates the following problems:

- It means doing fewer experiments with higher confidence requirements. These conditions reduce velocity and create serious difficulties for product development whose processes rely on a very rapid feedback loop.

- It makes experimentation less accessible as a tool for companies and teams that don’t have large sample sizes but have a lot of ideas they want to test.

- It ignores that the conventional criteria for decision-making are simply guidelines and that error rates should be set on a case-by-case basis. For example, Jacob Cohen, an American psychologist and statistician best known for his work on statistical power and effect sizes, openly acknowledged that his guidelines for thresholds are “values chosen [that] had no more reliable basis than my own intuition” (Cohen, 1988, p. 532), and that researchers should create their own guidelines when warranted.

This post will highlight a few ideas for driving successful experimentation and how to balance velocity with experimentation confidence:

- Greater velocity drives impact: In this section, we’ll illustrate why increased velocity can be a big driver of impact and why it is useful to frame experimentation tradeoffs in the context of reward maximization instead of minimizing the false positive rate.

- Increasing confidence through standardization: In this section we will highlight that there are better ways of increasing trust and reducing inflated Type I error that don’t rely on conservative rule-of-thumb thresholds.

Why experimentation velocity matters

We can build a simple simulation model to understand the impact of velocity on experimentation. Let’s assume there are two start-ups that compete in an identical industry. Each startup is led by CEOs who have somewhat divergent views on experimentation.

- High-trust CEO comes with a strong research bias. This means that all experiments need to be carefully planned, and findings are valid only if they pass the conventional threshold p-value of 0.05. Note that this CEO is the baseline for how decision-making is done by teams that use experimentation in both industry and academia.

- High-velocity CEO values flexibility and cares not only about the validity of experiments, but also about the speed of iteration and business impact. Because all experiments have directional hypotheses, a one-sided threshold of p=0.05 is considered good enough. Moreover, any experiment whose estimated treatment effect sits at least one standard error in the direction opposite to the hypothesis gets terminated early (i.e., we are allowed to peek once midway through our target sample size and make a continue/discontinue decision).

The two CEOs care about a single metric, order revenue per user, which stands at $30. We will assume that 20% of the true effects tested have a negative impact, 60% lead to no impact, and the remaining 20% lead to a positive impact. Moreover, we assume that all experiments have preset Type I and Type II error rates of 5% and 20% respectively. Under a constrained environment, where these CEOs have more ideas they want to test than experimental bandwidth, which CEO is likely to have a higher return on experimentation?

In our simulation, the one-time peeking and one-sided alpha threshold would lead to a much higher false positive rate than the alternative choice. If our goal is to minimize the false positive rate, the high-velocity CEO has failed to accomplish that objective. Nonetheless, as Figure 2 shows, the high-velocity CEO is able to run 50% more experiments and achieve $1 more in real revenue impact. By making a tradeoff around p-values and early stopping criteria, the high-velocity CEO is able to get a higher reward even after accounting for the negative consequences of an increased false positive rate. This example of comparing high trust versus high velocity highlights that focusing on reward maximization strategies in experimentation can dominate conventional criteria that minimize false positive rates.
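The two-CEO setup can be sketched as a small simulation. This is a minimal sketch under stated assumptions, not the model behind Figure 2: it works directly with z-scores, assumes every non-null effect is exactly the size the experiment was powered to detect, uses a hypothetical effect of $0.30 per user, and charges half a slot of bandwidth for an experiment that is stopped at the interim look. The names `run_program`, `EFFECT`, and `Z_POWERED` are illustrative, and the output is not expected to reproduce the numbers quoted above.

```python
import random

EFFECT = 0.30             # assumed size of a true win or loss in dollars per user (hypothetical)
Z_POWERED = 1.96 + 0.84   # expected full-sample z-score for 80% power at two-sided alpha = 0.05


def draw_true_direction(rng):
    """Return -1, 0, or +1 with probabilities 20% / 60% / 20%."""
    u = rng.random()
    if u < 0.2:
        return -1
    if u < 0.8:
        return 0
    return 1


def run_program(budget, z_ship, peek, rng):
    """Run experiments until the bandwidth budget is used up.

    budget: number of full-length experiment slots available
    z_ship: z-score required at full sample to ship the treatment
    peek:   if True, stop at the halfway point when z_half < -1
    """
    used = 0.0
    stats = {"experiments": 0, "impact": 0.0, "nulls": 0, "null_ships": 0}
    while used < budget:
        direction = draw_true_direction(rng)
        drift = direction * Z_POWERED
        # z-scores of the two independent halves of the sample
        z_first = rng.gauss(drift * 0.5 ** 0.5, 1.0)
        z_second = rng.gauss(drift * 0.5 ** 0.5, 1.0)
        stats["experiments"] += 1
        stats["nulls"] += int(direction == 0)
        if peek and z_first < -1.0:
            used += 0.5   # terminated at the interim look
            continue
        used += 1.0
        z_full = (z_first + z_second) * 0.5 ** 0.5
        if z_full > z_ship:
            stats["impact"] += direction * EFFECT
            stats["null_ships"] += int(direction == 0)
    return stats


trust = run_program(20_000, z_ship=1.96, peek=False, rng=random.Random(7))
velocity = run_program(20_000, z_ship=1.645, peek=True, rng=random.Random(7))
for name, s in (("high-trust", trust), ("high-velocity", velocity)):
    fpr = s["null_ships"] / s["nulls"]
    print(name, s["experiments"], round(s["impact"], 1), round(fpr, 3))
```

In this sketch the high-velocity policy ships more null effects, yet it fits more experiments into the same bandwidth and accumulates more true impact, which is the reward-maximization framing in miniature.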

The benefits of maintaining high velocity

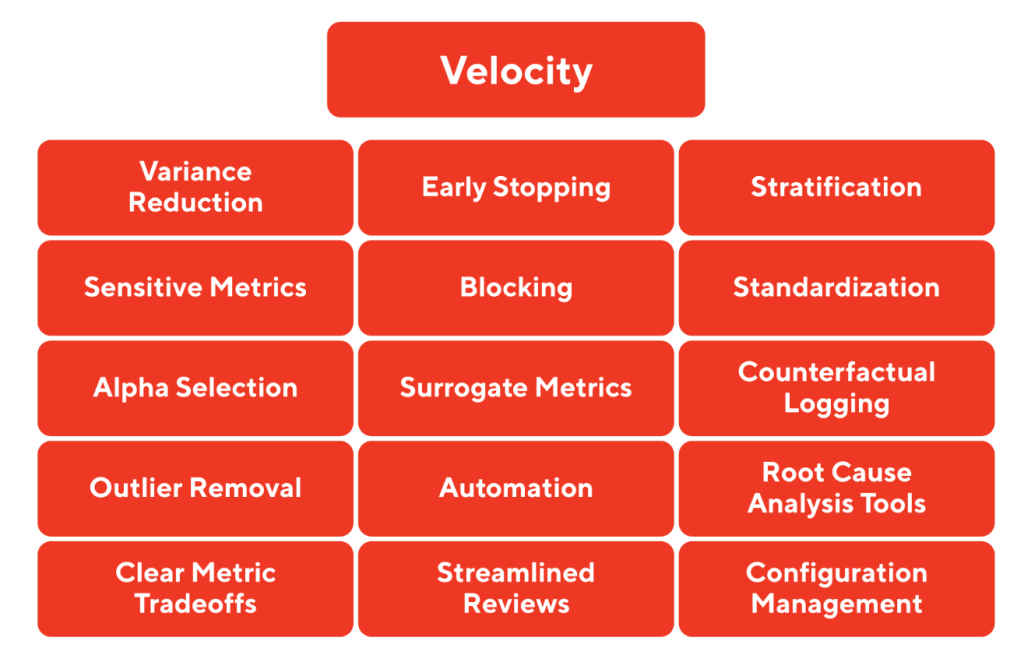

Velocity is a big reason why DoorDash so emphatically cares about variance reduction and its benefits. Nonetheless, it is more useful to think of velocity in the context of the overall experimentation lifecycle. Velocity is measured from the moment someone comes up with an idea to the moment they are able to make a decision based on experimental outputs, so focusing on multiple velocity initiatives outlined in Figure 3 can have high payoffs. Within DoorDash, among teams that optimize their workflows around experimentation velocity, we have observed the following strengths:

- Better metric understanding: Experiments provide a great opportunity to be more in tune with the drivers of company metrics, understand mediation effects, find instrumental variables, make tradeoffs, and run meta-analyses. We have consistently observed that people build better perspectives of their products and metrics and more empathy about their users because experiments are a forcing function for doing so.

- Greater reliability: If you launch an experiment once, you might occasionally have the codebase filled with magic numbers or undocumented forks, because you might tell yourself that “this is not going to be used or modified much”. If you’re required to launch dozens of experiments, you’re explicitly given a motivation to do a better job in setting up clear configuration files, automating repetitive tasks, and writing code that is more functional, decoupled, and refactorable. Experimentation forces better coding and architecture decisions because you’re always thinking about how to introduce flexibility to launch new changes faster, make modifications easier, and reduce the impact of harmful rollouts.

- Greater autonomy: To enable higher velocity, a company is required to trust experimenters to operate in a fail-fast and learn-fast culture. Individual teams are asked to handle themselves with ownership and accountability, and there is no general supervisory body through which decisions are bottlenecked. This autonomy is a great motivator for high-performance teams.

Velocity is not always the goal

The tradeoffs we make between velocity and trust are driven by the industry and by the cost of rolling out a treatment that might have a negative impact. Decisions around hiring, drug treatments, credit approval, or social policy require a higher burden of evidence. Similarly, decisions that are not easily reversible and require an increased maintenance burden should also need more evidence. Nonetheless, many industries and contexts don’t benefit from a decision process that prescribes conventional restrictions on velocity, and experimenters should be encouraged to focus on reward maximization instead of minimizing the false positive rate.

Moreover, the tradeoff between velocity and trust should be mitigated by how efficiently teams run and are using their experimental capacity. If a team runs one experiment when they have the capacity to run ten, there is a benefit to being more conservative because there is no opportunity cost from a delay in decision-making or slower idea iteration.

Why experimentation confidence matters

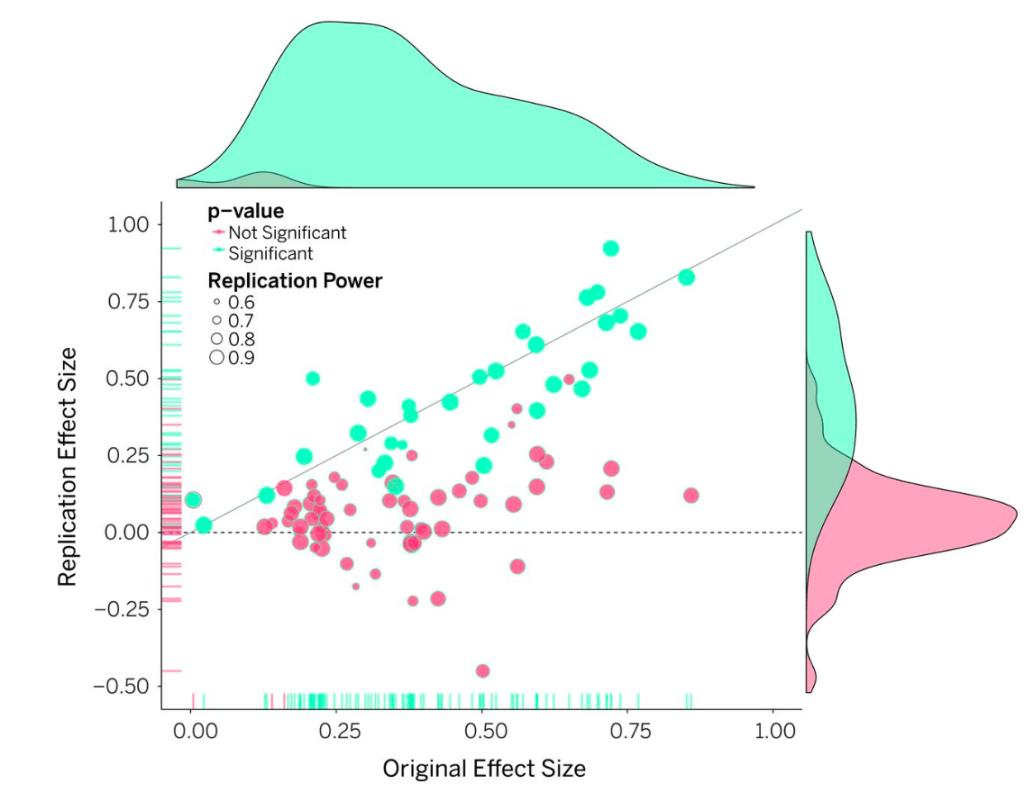

Failed replication is common in academic research settings, where the likelihood of being published in top journals is very low, articles go through multiple reviews, and there are high levels of scrutiny, yet “publish or perish” incentives are inevitable. For example, in 2015, Nosek et al. published a comprehensive replication study in the journal Science highlighting that among 100 prominent psychology experiments, roughly 66% failed replication attempts (see Figure 4). In an industry setting, we can be compromised by incentives too, such as the desire for recognition and for fostering good relationships with the stakeholders around us. That human desire can bias us to seek outcomes or engage in practices that lower trust in experimental outputs.

Figure 4: Two-thirds of experiments failed replication attempts or had effect sizes that were far smaller than what were observed in the original study.

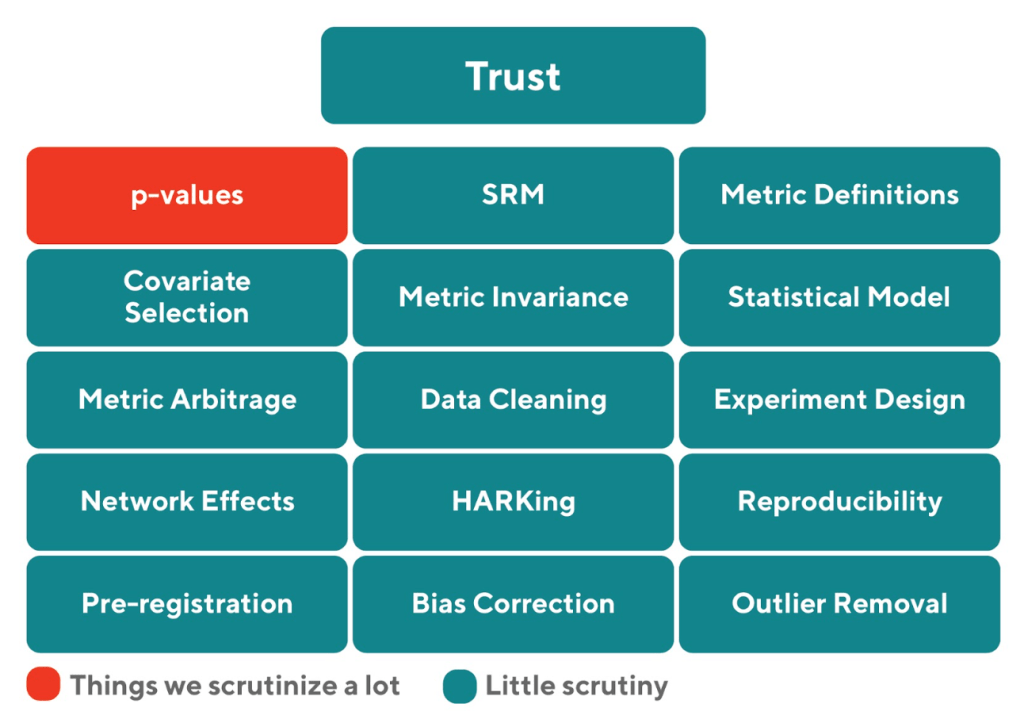

There are many factors that affect experimentation trust (see Figure 5). Although convention can push us to scrutinize specific things like p-values, the root of many experimentation issues is not putting things down in writing before experiment launch: what the hypothesis is, how to test it, what your metrics will be, the covariates, the experiment duration, the unit of randomization, and the statistical model you’ll use to analyze the data. Recording these factors has a tremendous impact in reducing researcher degrees of freedom, getting more precise feedback from stakeholders, and reducing p-hacking. Anyone can then look at the analysis, compare it to the experiment design, and call out inconsistencies with the plan.
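One way to make such a written record checkable is to store it as structured data and compare the final analysis against it. The sketch below is purely illustrative: `ExperimentPlan`, its field names, and the example values are hypothetical and do not describe any particular experimentation platform.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class ExperimentPlan:
    """What gets written down before launch (field names are hypothetical)."""
    hypothesis: str
    primary_metric: str
    guardrail_metrics: tuple
    covariates: tuple
    duration_days: int
    randomization_unit: str
    statistical_model: str


def deviations(plan, analysis):
    """List the fields where the analysis departs from the pre-registered plan."""
    return [f.name for f in fields(plan)
            if getattr(plan, f.name) != getattr(analysis, f.name)]


plan = ExperimentPlan(
    hypothesis="New checkout flow increases order revenue per user",
    primary_metric="order_revenue_per_user",
    guardrail_metrics=("cost_per_user",),
    covariates=("pre_period_order_revenue",),
    duration_days=14,
    randomization_unit="user",
    statistical_model="difference in means with covariate adjustment",
)

# The analysis that was actually run: stopped early and added a covariate after the fact.
analysis = replace(plan, duration_days=9,
                   covariates=("pre_period_order_revenue", "platform"))

print(deviations(plan, analysis))
```

A reviewer does not need to judge whether each deviation was justified from the code alone; the point is only that the deviations become visible and can be discussed.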

In this section, we will highlight two topics that affect trust and are particularly challenging to solve at scale:

- Preventing metric arbitrage and

- Ensuring metric invariance.

How metric arbitrage eliminates experiment wins

Metric arbitrage happens when a team has a primary and a guardrail metric. The team wants the primary metric to be positive and statistically significant and for the guardrail metric to stay flat or generate no deterioration. Metric arbitrage specifically affects large organizations where teams operate in a decentralized context. As companies scale and grow, entropy increases. In order to remain productive and ensure increased focus, teams that own a large area of the product get subdivided into separate teams that sometimes have competing priorities. For example, we might have the following subteams:

- Team Growth has the goal of adding more users under some efficiency constraint represented by $cost/user. The team is willing to spend at most $10 to acquire one new consumer. If they acquire a consumer under that $10 guardrail, they are doing great.

- Team Pricing focuses on profitability and is responsible for pricing the product. They are willing to lose one consumer if doing so saves at least $10.

Both teams operate from the same tradeoffs, which in itself is very rare. Nonetheless, even if teams operate from fundamentally the same tradeoffs, they might engage in many actions to make the primary metric more likely to pass statistical significance while the guardrail metric is deemed as not statistically significant. For example:

- Team Growth might ship a promotion that will bring more users with a p < 0.01, but will ignore the potential increase in costs to $15/user because p-value is 0.2.

- Team Pricing might increase prices, which will bring an incremental $1/user, but will ignore the potential risk of a decrease in user growth because p-value is 0.3.

Although the outcomes described above might seem incongruous, given that the variance of a metric across teams should not change, these types of outcomes can be easily achieved simply by deciding to apply variance reduction selectively only on the primary metric and not on the guardrail metric (see Figure 6). When we apply variance reduction, we reduce the standard error around the primary metric while keeping the standard error for the guardrail metric wide.
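To illustrate how much selective variance reduction can matter, here is a minimal sketch using a CUPED-style adjustment with a pre-experiment covariate. CUPED is assumed here as one common variance reduction technique; the text does not say which method the teams use. The data is synthetic and the function names are illustrative.

```python
import random
import statistics


def cuped_adjust(metric, covariate):
    """Remove the part of the metric explained by a pre-experiment covariate."""
    mean_x = statistics.fmean(covariate)
    mean_y = statistics.fmean(metric)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(covariate, metric))
    var_x = sum((x - mean_x) ** 2 for x in covariate)
    theta = cov_xy / var_x   # regression slope of metric on covariate, pooled across arms
    return [y - theta * (x - mean_x) for x, y in zip(covariate, metric)]


def diff_standard_error(control, treatment):
    """Standard error of the difference in means between two groups."""
    return (statistics.variance(control) / len(control)
            + statistics.variance(treatment) / len(treatment)) ** 0.5


rng = random.Random(0)
n = 5_000
pre = [rng.gauss(30.0, 10.0) for _ in range(2 * n)]    # synthetic pre-experiment value per user
post = [0.9 * x + rng.gauss(3.0, 4.0) for x in pre]     # synthetic in-experiment metric
adjusted = cuped_adjust(post, pre)

se_raw = diff_standard_error(post[:n], post[n:])
se_cuped = diff_standard_error(adjusted[:n], adjusted[n:])
print(f"raw SE: {se_raw:.3f}  adjusted SE: {se_cuped:.3f}")
```

The same metric gets a much narrower interval once adjusted. A team that adjusts only its primary metric therefore makes it easier for the primary to reach significance while the guardrail keeps the wide, unadjusted standard error.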

As companies become larger, having a shared understanding of metrics and tradeoffs becomes paramount. Even if you have top-notch experimentation tooling, research methods, tracking, and telemetry, if teams are allowed to operate without metrics consistency and alignment, arbitrage can completely eliminate the benefits of running experiments since teams cancel out each other's improvements. Although there are statistical approaches that focus on equivalence testing that specifically deal with testing for the absence of evidence, the core problems are driven by a lack of standardization and researcher degrees of freedom. Within the Experimentation platform, we attempt to reduce metric arbitrage by having a good integration with our internal Metrics platform. To reduce arbitrage, we specifically focused on the following:

- Standardized metric definitions. The first solution is to make sure that teams use a set of shared metric definitions. It is very easy during the process of running an experiment to reinvent definitions or adjust them slightly to your specific subteam. Although experimenters should be allowed to define new metrics or redefine metrics, since that process encourages exploration of new ideas, stakeholders need to clearly be able to see that the new metric definitions are inconsistent with standardized metrics used across the company.

- Always-on guardrails. The second solution is to make sure that when an internal user launches an experiment, they are not allowed to opt out from tracking a set of guardrail metrics that the majority of stakeholders within the company care about.

- Metric insights. The third solution is to expose to teams how tradeoffs are being made within the platform by reporting on historical experiments. For example, we can allow stakeholders to filter on a specific metric and see all historical experiments that were launched that affected that metric. They would then be able to drill down to experiments where the metric was negative and ask different subteams what made them make a launch decision that led to a detrimental metric impact. By increasing this transparency, internal teams can have a more open debate around tradeoffs.

The integration with a Metrics platform allows teams to more efficiently and transparently communicate how they make tradeoffs or surface cases when teams compete against each other on a set of priorities. We hope to showcase the work on the Metrics platform in a future blog post.
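The equivalence-testing idea mentioned earlier can be sketched as a non-inferiority check on a guardrail: instead of treating "not statistically significant" as "flat", the guardrail passes only when the confidence bound rules out a deterioration larger than an agreed margin. The function name, the $1 margin, and the normal approximation below are assumptions made for illustration, not a description of the platform's logic.

```python
from statistics import NormalDist


def guardrail_verdict(estimate, std_error, margin, alpha=0.05):
    """Classify a guardrail where higher values are worse (e.g. cost per user).

    estimate:  observed change in the guardrail (treatment minus control)
    std_error: standard error of that change
    margin:    largest increase stakeholders agreed to tolerate (hypothetical)
    """
    z = NormalDist().inv_cdf(1 - alpha)
    upper = estimate + z * std_error
    lower = estimate - z * std_error
    if upper < margin:
        return "non-inferior"    # any increase is confidently below the margin
    if lower > 0:
        return "degraded"        # the increase is confidently real
    return "inconclusive"        # too noisy to claim the guardrail is flat


# A $5 cost increase with a two-sided p-value of 0.2 implies a standard error near 3.9.
print(guardrail_verdict(estimate=5.0, std_error=3.9, margin=1.0))
# The same estimate with a tighter standard error, e.g. after variance reduction.
print(guardrail_verdict(estimate=5.0, std_error=1.0, margin=1.0))
```

Under this framing, the Team Growth example would be reported as inconclusive rather than as a flat guardrail, which makes the missing evidence explicit.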

How lack of metric invariance affects generalizability

We commonly assume that water boils at a temperature of 100 °C (212 °F). Yet if you run multiple experiments under different conditions, you'll find that this assumption does not generalize. Instead, atmospheric pressure affects the boiling temperature of water, with increased elevation seeing a lower boiling point. This is why at the top of Mount Everest, water boils at roughly 70 °C, and why pressure cookers are becoming a staple appliance in kitchens due to how efficiently they can speed up the cooking process. The inconsistent relationship between temperature and boiling point for water is an example of a metric lacking invariance. In one experiment you might be able to establish a relationship between X and Y, but that relationship changes based on many mediator variables present in the environment. If your environment changes, you have to adjust the assumptions you made.

We find a lack of metric invariance in a large portion of our experiments.

- A seasonal cuisine carousel is very impactful in driving a higher order rate, but it requires one to correctly match and regularly update the carousel to the relevant season. Providing users a carousel of ice-cream offerings during winter months because an experiment in summer months showed higher conversion would likely be a miss.

- We might assume that if we improve how quickly a page loads, we will see increased revenue. Nonetheless, time is a reality perceived by the user and there are perceptual and cognitive limits below which users are likely not sensitive to changes in page load times. Therefore, you might find that a 0.2s improvement doesn't translate to any meaningful impact when your latency is already perceived to be fast.

- We might build a more efficient lever for managing supply-demand, yet the presence of healthy supply means that this lever rarely gets deployed, which leads to flat metrics. In an undersupplied environment, the lever could be very impactful.

- We might find that a new UI drives engagement up, but long-term impact stays flat due to novelty effects.

The best solution to this problem is to view experiments as a learning opportunity, regardless of the outcome of a specific experiment. This scrutiny and exploration have several benefits.

- They allow us to increase the success rate of experimental launches. If a team has a success rate of 20% for a first experiment launch, that number can often double after a relaunch because the first experiment instructs us how to adjust the treatment in the follow-up experiments.

- All experiments provide meaningful lessons and serve as a rich source of potential projects. The magic of experimentation is that every time you complete one experiment, you're often left with enough ideas to follow up with two or three other treatments.

- Lastly, follow-throughs can allow us to understand under what conditions our treatment will generalize. For example, when we build levers for addressing supply-demand imbalance, we might ship flat global results if we have enough evidence to suggest that the treatment effect increases as a function of undersupply in the market.
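The supply-demand example can be illustrated with a simple segmented estimate. The sketch below uses synthetic data and assumes, hypothetically, that the treatment effect grows linearly with an `undersupply` score between 0 and 1; the thresholds for the two segments are arbitrary choices for illustration.

```python
import random
import statistics

rng = random.Random(1)
rows = []
for _ in range(30_000):
    undersupply = rng.random() ** 3      # most markets are healthy, a few are badly undersupplied
    treated = rng.random() < 0.5
    effect = 2.0 * undersupply if treated else 0.0   # assumed: the lever only matters when undersupplied
    outcome = 30.0 + effect + rng.gauss(0.0, 5.0)
    rows.append((undersupply, treated, outcome))


def ate(subset):
    """Difference in mean outcome between treated and control rows."""
    treated = [y for _, t, y in subset if t]
    control = [y for _, t, y in subset if not t]
    return statistics.fmean(treated) - statistics.fmean(control)


healthy = [r for r in rows if r[0] < 0.2]
undersupplied = [r for r in rows if r[0] >= 0.6]

print(f"global ATE:        {ate(rows):.2f}")
print(f"healthy markets:   {ate(healthy):.2f}")
print(f"undersupplied:     {ate(undersupplied):.2f}")
```

The global estimate is diluted by the many healthy markets where the lever rarely fires, while the undersupplied segment shows where the treatment actually generalizes. Segments like these should be specified before launch to avoid searching for a favorable subgroup after the fact.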

Overall, for any experimentalist, it is worth investing the time in getting familiar with some of the causal inference tooling that goes beyond simple ATE estimation (see Imai, King, & Stuart, 2008).

Conclusions

A successful experimentation paradigm requires one to operate with high trust and high velocity. Unfortunately, those two often compete against each other. We generally recommend focusing on the following to help balance the two.

- If you have a long backlog of ideas and high execution capacity yet experiments take longer to run, focus on things that improve velocity: variance reduction, higher alpha thresholds, sequential testing, standardization and automation, and a rapid experiment review process. Sometimes this can lead to a higher false positive rate, but as long as the team makes more experimental bets, they are likely to maximize experimentation impact.

- If you notice a lack of metric consistency, replication failures, or a tendency to rationalize any experiment result, then focus on standardization by asking experimenters to pre-register their proposals, carefully track metrics, and be very explicit about how they make decisions ahead of experiment launches. The best way of mitigating the Type I error is by adopting better research practices rather than by compensating through more conservative thresholds.

If you are passionate about building ML applications that impact the lives of millions of merchants, Dashers, and customers in a positive way, consider joining our team.

Acknowledgements

Thanks to Jared Bauman and Kurt Smith for feedback on this article and the overall Experimentation team for engaging discussions about how to maximize experimentation impact.