In a microservice architecture, cross-service communication happens under a set of global rules that are hard to enforce across all services without standardizing client-service communication. Relying on individual service client implementations to adhere to these rules forces each team to repeat the same work, which hurts developer velocity. Furthermore, when an individual implementation strays from a rule by mistake, overall reliability often suffers severely.

In this article we will explain how DoorDash elevated developer velocity and cross-service reliability through standardizing client-service communication, by specifying a set of gRPC client requirements, and building them into Hermes, our new standard gRPC client library.

The challenge of managing a highly distributed architecture

Modern microservice architectures have some advantages over monolithic architectures in terms of improved separation of concerns, scalability, and developer velocity. However, one of the greatest challenges that comes with a microservices architecture is maintaining a homogeneous set of standards that transcends service boundaries. Such standards are usually not about how services are implemented, but more about how they communicate with each other. Such standards include:

- Service discovery and load balancing: It is important for all services to agree on how they will discover each other’s addresses and balance the load among these addresses. For instance, a rule stating that service discovery is DNS-based, and load-balancing happens on the client side, eliminates the server-side load balancer bottlenecks, but the rule needs to be enforced.

- Enforcement of reliability guardrails: A service should have the right to dictate how it wants its clients to behave in terms of timeouts, retries, and circuit breaking criteria. These guardrails ensure that outages are isolated, and that recovery is fast.

- Cross-service context propagation: A user request usually comes with headers that carry important information and should be visible to all services involved in fulfilling that request. Headers with global semantics are assumed to be propagated by all clients to their outgoing requests to other services.

- Service-to-service authentication: All clients across all services are assumed to comply with a global standard in terms of how to authenticate to their dependency services. This global standard is usually ratified by a security team, and its automation mitigates security breaches.

- Observability of communication: All clients are assumed to instrument the same metrics and log the same information about outgoing requests and their responses.

How can client-service communication standards be enforced?

One way to try to enforce these standards is to document them well, rely on engineers to implement them in the services they own, and verify them in code reviews. However, engineers make mistakes, which can have severe consequences. Additionally, relying primarily on teams to enforce standards is not practical when teams should be focused on building new features.

Another way to enforce these standards is to bake them right into the protocol client library. This dramatically decreases the cognitive load needed for service owners to integrate their services, virtually eliminating the odds of making mistakes, which adds a huge boost to reliability and developer velocity.

Enforcing reliability guardrails in the client library

After a number of outages and service failures, DoorDash turned its focus to cross-service reliability guardrails. These past outages were either caused or amplified by service clients behaving inappropriately during an incident. Examples include:

- A client has a request timeout set arbitrarily long, causing client thread saturation when the service is going through an incident.

- A client persistently retries failing requests when it should back off, overwhelming the service when it is trying to recover.

- A client keeps on sending requests when the service is evidently failing across the board, instead of breaking the circuit and failing fast, causing cascading failures.

The ideal solution is to build a client library that seamlessly enforces client-service reliability guardrails. This library must give services the ability to define the reliability behavior of the clients that call them. This is done by configuring the parameters of the clients’ timeouts, retry strategy, and circuit breakers.

- If set correctly, timeouts are the first line of defense for reliability. If set too long, client threads can be easily saturated in an outage. If set too short, requests are abandoned prematurely. Services should own the timeout values used by the calling clients, since these values are tightly related to the service latency.

- Seamless retries with exponential backoff and jitter are the next line of defense. They ensure that transient errors have limited effect. How long the client should wait between retries, how many attempts should be made, and which error codes are retriable all depend on the service implementation. Therefore services should also control this aspect of client behavior.

- Circuit breaking is the last line of defense. If an outage happens and the service failure rate spikes, it makes sense for the client to detect the spike, skip outgoing requests altogether, and simply fail fast for a while. This allows the service a chance to recover without a long request queue. What constitutes a break-level failure rate depends on the service, and that is why clients’ circuit breaking configurations should also be dictated by it.
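To make the retry and circuit breaking guardrails concrete, here is a minimal sketch in plain Kotlin. It is not the Hermes or Armeria implementation: `RetryPolicy`, `backoffDelayMillis`, `callWithRetries`, and `CircuitBreaker` are hypothetical names, the thresholds are arbitrary, and the breaker is a simplified count-based variant that is not thread-safe and has no half-open state.

```kotlin
// Illustrative sketch only: all names and values here are assumptions.

/** Retry parameters a service might publish for its clients. */
data class RetryPolicy(val maxAttempts: Int, val baseDelayMillis: Long, val maxDelayMillis: Long)

/**
 * Delay before retry number [retry] (1-based), using exponential backoff with full jitter:
 * a value picked from 0..min(maxDelay, baseDelay * 2^(retry - 1)).
 * [pick] selects a value in 0..bound and is injectable so the function can be tested.
 */
fun backoffDelayMillis(
    policy: RetryPolicy,
    retry: Int,
    pick: (Long) -> Long = { bound -> (0L..bound).random() }
): Long {
    require(retry >= 1) { "retry is 1-based" }
    val exponent = minOf(retry - 1, 30) // cap the shift so the product cannot overflow easily
    val ceiling = minOf(policy.maxDelayMillis, policy.baseDelayMillis shl exponent)
    return pick(ceiling)
}

/** Runs [call], retrying retriable failures until [RetryPolicy.maxAttempts] is reached. */
fun <T> callWithRetries(
    policy: RetryPolicy,
    isRetriable: (Exception) -> Boolean = { true },
    sleep: (Long) -> Unit = { Thread.sleep(it) },
    call: () -> T
): T {
    var attempt = 1
    while (true) {
        try {
            return call()
        } catch (e: Exception) {
            if (attempt >= policy.maxAttempts || !isRetriable(e)) throw e
            sleep(backoffDelayMillis(policy, attempt))
            attempt++
        }
    }
}

/** Opens when the observed failure rate reaches the threshold, then fails fast for a while. */
class CircuitBreaker(
    private val failureRateThreshold: Double,
    private val minimumRequests: Int,
    private val openDurationMillis: Long,
    private val clock: () -> Long = { System.currentTimeMillis() }
) {
    private var successes = 0
    private var failures = 0
    private var openedAt: Long? = null

    /** Returns true if a request may be sent; false means the caller should fail fast. */
    fun allowRequest(): Boolean {
        val opened = openedAt ?: return true
        if (clock() - opened < openDurationMillis) return false
        // The open period has elapsed: reset the counters and let traffic through again.
        openedAt = null
        successes = 0
        failures = 0
        return true
    }

    /** Records the outcome of a request and opens the circuit if the failure rate is too high. */
    fun record(success: Boolean) {
        if (success) successes++ else failures++
        val total = successes + failures
        if (total >= minimumRequests && failures.toDouble() / total >= failureRateThreshold) {
            openedAt = clock()
        }
    }
}
```

In this sketch the service-owned values are exactly the constructor arguments: the client code stays the same, and only the numbers change per service.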

Most of the involved standards are well-defined industry concepts. Implementing them from scratch would take months of effort, so it is better to rely on a mature open source base library and adapt it to our needs. This lets us solve the problem in a much shorter time frame, since we only need to build our own features on top of the library.

Client behavior as a part of the service contract

At DoorDash, we rely on gRPC as the standard protocol for communication among our microservices. To build the solution described above, we designed and implemented a standard gRPC client library, which we call Hermes internally. In Greek mythology, Hermes (pronounced “hermeez”) is the god of boundaries, communication, and travel. He is the messenger of the gods. He is able to move quickly and freely between the worlds of the mortal and the divine, aided by his winged sandals.

Our first step was to find a good way to enable services to set client parameter values for timeouts, retry, and circuit breaking, and make this step a core part of setting up the connection.

An option we found was to rely on the standard gRPC service config. This meant we needed to implement a thicker DNS that would allow services to register these values, and would add the service configuration as part of its query responses. We decided this change would consume too many resources to implement, and we wanted to avoid it if possible.

Our next thought was that, as we were already using protocol buffers (protobuf) to represent the service interface, perhaps we should extend its use to define other aspects of the service contract, such as these reliability parameter values. After some research, we shaped an elegant solution using protobuf custom options. We decided to define these parameters as a common protobuf extension for protobuf services.

```protobuf
syntax = "proto3";

package service_client;

// Required for extending google.protobuf.ServiceOptions.
import "google/protobuf/descriptor.proto";

message ServiceClientConfig {
  int64 response_timeout_millis = 1;
  ...
}

extend google.protobuf.ServiceOptions {
  service_client.ServiceClientConfig client_config = 999999;
}
```

Any service can use this extension to annotate its definition with protobuf options that declare values for these parameters.

```protobuf
import "service_client_config.proto";

service GreetingService {
  option (service_client.client_config).response_timeout_millis = 800;
  ...
  rpc hello(HelloRequest) returns (HelloResponse);
  ...
}
```

These custom options have standard semantics, and the client library can pull their values from the service descriptor at connection establishment time using reflection.

```kotlin
import com.google.protobuf.Descriptors.ServiceDescriptor

// Reads the response timeout declared through the client_config service option.
fun getServiceTimeout(serviceDescriptor: ServiceDescriptor): Long =
    serviceDescriptor
        .options
        .getExtension(ServiceClientConfigProto.clientConfig)
        .responseTimeoutMillis
```

This way, service owners can add these simple options as annotations to their service definition, and our standard client library can seamlessly enforce them.
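One detail a client library has to handle when reading such options is the unset case: a proto3 `int64` field that a service never sets reads back as `0`. The sketch below shows one possible way to treat that, falling back to a default and capping very large values. The function name, the default, and the cap are all hypothetical and not taken from Hermes.

```kotlin
// Illustrative sketch only: the default and maximum values are assumptions.

/**
 * Resolves the timeout a client should apply, given the value declared by the service.
 * A non-positive value is treated as "not declared" and replaced by [defaultMillis];
 * any declared value is capped at [maxMillis].
 */
fun effectiveTimeoutMillis(
    declaredMillis: Long,
    defaultMillis: Long = 1_000L,
    maxMillis: Long = 30_000L
): Long =
    if (declaredMillis <= 0L) defaultMillis else minOf(declaredMillis, maxMillis)
```

With these assumed values, a service declaring 800 ms gets exactly 800 ms, while a service that declares nothing gets the library default instead of an unbounded wait.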

Searching for the perfect open source base library

Next, we set out to find the open source library that would best cover the following requirements:

DNS-based service discovery and client-side load balancing. We had already decided that server-side load balancers constitute bottlenecks, and had started moving away from them. Some services had already implemented client-side load balancing by relying on the server-side load balancer merely to establish a long-lived connection pool, and rotating among these connections. This solution had the downside of being complicated to manage, since established connections need to be kept in sync with the server’s changing horizontal scale.

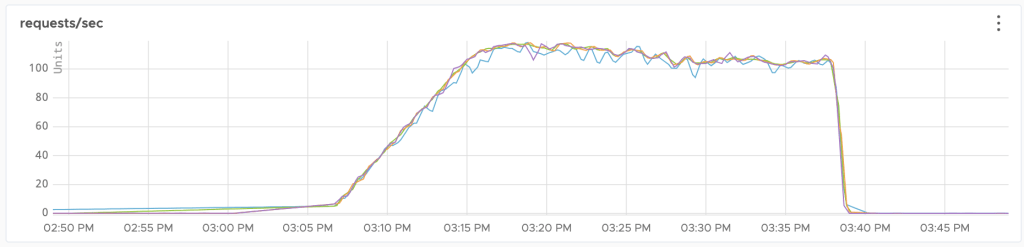

To ensure the clients refresh the connections when the service’s horizontal scale changes, the server used to periodically close all the client connections, so that clients would reestablish them with the new scale. This pattern led to a fuzzy, uneven load distribution, which hurt capacity planning. Figure 1 shows an example of load distribution under this arrangement.

Therefore, the new requirement is that clients should be able to seamlessly rely on a DNS for service discovery, and that they should do client-side load balancing while automatically honoring the DNS TTL to periodically refresh the connection pool.
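The requirement can be pictured with a small sketch: a round-robin balancer that re-resolves its endpoint list once a TTL has elapsed. This is a simplified illustration in plain Kotlin, not how Armeria or Hermes implement it. The class name is hypothetical, the resolver is injected rather than performing a real DNS query, the TTL is a fixed constructor argument rather than the value carried by the DNS records, and the class is not thread-safe.

```kotlin
// Illustrative sketch only: names and structure are assumptions.

/** Round-robin balancer that re-resolves its endpoint list once the TTL has elapsed. */
class RefreshingRoundRobin(
    private val ttlMillis: Long,
    private val resolve: () -> List<String>,
    private val clock: () -> Long = { System.currentTimeMillis() }
) {
    private var endpoints: List<String> = emptyList()
    private var resolvedAt = 0L
    private var resolved = false
    private var next = 0

    /** Returns the endpoint for the next request, refreshing the list first if it is stale. */
    fun pick(): String {
        val now = clock()
        if (!resolved || now - resolvedAt >= ttlMillis) {
            endpoints = resolve()
            resolvedAt = now
            resolved = true
        }
        check(endpoints.isNotEmpty()) { "no endpoints resolved" }
        // The modulo keeps the index valid even if the list shrank after a refresh.
        val endpoint = endpoints[next % endpoints.size]
        next = (next + 1) % endpoints.size
        return endpoint
    }
}
```

Because the refresh is driven by the client on a timer, a newly added instance starts receiving traffic after at most one TTL, without the server having to close any connections.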

Configurable reliability APIs. The base library must have mature, configurable, ready-to-use implementations for retries and circuit breakers, as these concepts are too complicated and too widely used to implement from scratch.

Extensibility. The base library must be highly extensible, so that we can easily add DoorDash’s specific requirements involving the standards described above, like observability, context propagation, and service-to-service authentication, with minimum friction.

After some research we decided on Armeria as our top candidate. Armeria, built by LINE Corporation and released as open source under the Apache 2.0 license, is a microservice framework powered by a very strong open-source community. It is built in Java, which fits very nicely in our Kotlin environment. It covers all the required features: DNS service discovery and client-side load balancing, along with native timeouts, retries, and circuit breakers. It is also highly extensible through the use of an elegant decorator pattern, which allows us to add all of our custom aspects to all outgoing requests. Figure 2, below, depicts the architecture of our standard gRPC client.
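The decorator idea can be illustrated with plain Kotlin function types. The sketch below is not Armeria's API: `Request`, `Response`, `propagateContext`, and `recordMetrics` are hypothetical names, and a real client call would be asynchronous. It only shows how cross-cutting behavior can wrap every outgoing request without the caller being aware of it.

```kotlin
// Illustrative sketch only: these types stand in for a real client's request and response.

data class Request(val method: String, val headers: Map<String, String> = emptyMap())
data class Response(val status: String)

/** Adds the given context headers to every outgoing request; explicit request headers win. */
fun propagateContext(
    context: Map<String, String>,
    next: (Request) -> Response
): (Request) -> Response =
    { request -> next(request.copy(headers = context + request.headers)) }

/** Counts outgoing calls per method and response status. */
fun recordMetrics(
    counters: MutableMap<String, Int>,
    next: (Request) -> Response
): (Request) -> Response =
    { request ->
        val response = next(request)
        val key = "${request.method}:${response.status}"
        counters[key] = (counters[key] ?: 0) + 1
        response
    }
```

A client would then be assembled by nesting decorators around the transport, for example `recordMetrics(counters, propagateContext(context, transport))`, so each new standard becomes one more wrapper rather than a change in every service.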

Filling the gaps through open source contributions

One problem we faced in fitting Armeria to our requirements was that we wanted services to be able to vary parameter values across methods within the same service. Armeria's APIs for retries and circuit breaking only provided the means to set these values at the level of the whole service, not at the level of the method. We contributed the missing features through pull requests that allow retry and circuit breaking configurations to vary by gRPC method; they were released with Armeria 1.3. Our collaboration experience with the Armeria core team was extremely pleasant.
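Conceptually, per-method configuration comes down to a lookup with a service-level fallback. The following sketch shows that resolution in plain Kotlin. It does not reproduce the API that was contributed to Armeria: `RetrySettings` and `ServiceRetrySettings` are hypothetical names, and the method names in the usage note are made up for illustration.

```kotlin
// Illustrative sketch only: names and values are assumptions.

/** Retry parameters that can be declared for a whole service or for a single method. */
data class RetrySettings(val maxAttempts: Int, val baseDelayMillis: Long)

/** Resolves the retry settings for a method, falling back to the service-level default. */
class ServiceRetrySettings(
    private val serviceDefault: RetrySettings,
    private val methodOverrides: Map<String, RetrySettings> = emptyMap()
) {
    fun forMethod(method: String): RetrySettings = methodOverrides[method] ?: serviceDefault
}
```

For example, a service could default to three attempts while overriding a non-idempotent method such as a hypothetical `charge` to a single attempt, so that it is never retried automatically.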

Results

Our initial results showed better load distribution and tail latency. Relying on DNS for service discovery directly resulted in even load distribution, which is great for effective capacity planning. Load distribution becomes even because DNS records come with a short TTL, after which the client gracefully refreshes the list of connections by redoing the DNS query, eliminating the need to periodically force connections to close based on their age. Figure 3, below, shows an example load distribution under this arrangement.

Like load distribution, tail latency also got better. The periodic connection reestablishment used to come in sync with a slight periodic increase in tail latency. This periodic increase disappeared once we shifted to DNS-based service discovery, because connections are no longer terminated based on their age. Figure 4, below, shows a tail-latency comparison between the old client and our new Hermes-based client.

Other than performance improvements, the new standard gRPC client now gives several benefits:

- We gain more clarity and consistency about the client behavior in outage situations. Since all reliability configurations are centralized in the service definition, the odds of client misbehavior due to wrong configuration are minimal, and there is no confusion about how the client is reacting to failures, making it easier to reason about whether or not it is contributing to the problem.

- Cross-service features that are context-dependent became a lot easier to roll out. Since Hermes hides context header propagation from developers, a new context header does not need to be individually implemented in each service contributing to the feature.

- Consistent standard observability among all services means that virtually any backend developer can understand logs and metrics from any service.

- Packing the library with all the standard features like standard timeouts, automated retries, circuit breaking, logging and metrics, context propagation, and service-to-service authentication, relieves the service developers from implementing them everywhere, which allows them to move faster.

In general, the grand result is better service reliability and higher developer velocity.

Conclusion

In any environment that employs a microservice architecture, having a consistent service client standard is key to elevating overall reliability and developer velocity. By implementing the standard as a part of the service contract, with the help of Armeria as a base library, we were able to release a gRPC client library in a very short time, seamlessly enforcing a standard behavior, allowing client developers to write less code and make fewer mistakes, and allowing service owners to consistently control the reliability parameters of all their clients. This helps us decrease outage frequency due to human error and decrease our mean time to recovery, in addition to allowing developers to move much faster. For any organization that relies on gRPC microservices, this process can help achieve better reliability and developer velocity.