In 2019, DoorDash’s engineering organization initiated a process to completely reengineer the platform on which our delivery logistics business is based. This article represents the first in a series on the DoorDash Engineering Blog recounting how we approached this process and the challenges we faced.

In traditional web application development, engineers write code, compile it, test it, and deploy it as a single unit to produce a functional service. However, this approach becomes more challenging for a site under continuous use by millions of end-users and constant development by hundreds of engineers.

DoorDash’s platform faced a similar reckoning. It was originally developed as a monolithic codebase, and the company’s business growth in 2019 exposed the weaknesses of our development model: growing developer ramp-up time, longer waits for test completion, higher developer frustration, and increased brittleness of the application. After some debate, the company began planning to transition the monolith to a microservice architecture.

Engineering teams have adopted microservices in many contexts where scaling web services with high traffic is critical for business. Essentially, the functions of the monolithic codebase are broken out into isolated and fault-tolerant services. Engineers end up handling the lifecycle of smaller objects, making changes easier to understand and thus less prone to mistakes. This architecture allows for flexibility on deployment frequency and strategy.

Making this change at DoorDash required an all-hands-on-deck approach, going so far as to halt all new feature development in late 2019 so that the company could focus on building a reliable platform for the future. While the extraction of business logic from the monolith is still ongoing, our microservice architecture is up and running, serving the millions of customers, merchants, and Dashers (our term for delivery drivers) who order, prepare, and deliver on a daily basis through our platform.

Growing the business

DoorDash began its venture into food delivery in 2013. At that time, the mission from an engineering standpoint was to build a fast prototype to gather delivery orders and distribute them to a few businesses through basic communication channels like phone calls and emails. The application would need to accept orders from customers and transmit those orders to restaurants while at the same time engaging Dashers to pick up orders and deliver them to customers.

The original team decided to build the DoorDash web app using Django, which was, and still is, a leading web framework. Django proved to be a good fit for achieving a minimum viable product in a short amount of time with a small group of developers. Django also provided ways to quickly iterate on new features, which was a great asset in the early days of the company since the business logic was constantly evolving. As DoorDash onboarded more engineers, the site increased in complexity and the tech stack began to consolidate around Django. With agility as the number one goal, the DoorDash engineering team kept iterating on this monolithic system while building the foundations of the business and extending the application’s functionality.

In the early years, building the web application with a monolithic architecture presented multiple advantages. The main advantage was that working on a monolith reduced the time-to-market for new features. Django provided the engineering team with a unified framework for the frontend and backend, a single codebase using a common programming language, and a shared set of infrastructure components. In the beginning this approach took the DoorDash web application far because it allowed developers to move quickly to enable new features for customers. Having the entire codebase in a single repository also provided a way to wire new logic to the existing framework and infrastructure by reusing consolidated patterns, thus speeding up our development velocity.

The cost of deploying a single service both in terms of engineering and in terms of operations was contained. We only had to maintain a single test and deployment pipeline, and a limited number of cloud components. For example, one common database powered most of the functionalities of the backend.

In addition to operational simplicity, another benefit of the monolithic approach was that one component could call into other components without incurring the cost of inter-service network latency. A monolithic deployment removed the need to consider inter-component API backward compatibility, slow network calls, retry patterns, circuit breaking, load shedding strategies, and other common failure isolation practices.
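To make one of these failure isolation practices concrete, here is a minimal sketch of a circuit breaker in Python. It is illustrative only: the `CircuitBreaker` class, the `CircuitOpenError` exception, and the threshold values are hypothetical names chosen for this example, not code from the DoorDash platform.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    """Hypothetical minimal circuit breaker (names and thresholds are assumptions)."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Fail fast instead of piling more load on a struggling service.
                raise CircuitOpenError("circuit open; call rejected")
            # Cool-down elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each remote call, for example `breaker.call(fetch_menu, store_id)`, where `fetch_menu` is again a hypothetical function. Inside a monolith the equivalent interaction is an in-process function call, so none of this machinery is needed.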

Finally, since all of the data lived in a single database, requests that required information from multiple domains to be aggregated could be efficiently retrieved by querying the data source with a single network request.

Growing pains

Although the monolithic architecture was a valid solution to enable agile development in the early phases, issues started emerging over time. This is a typical scenario in the lifecycle of a monolith that occurs when the application and the team building it cross a certain threshold in the scaling process. DoorDash reached this point in 2017, as was evident from the increasing challenge of building new functionalities and extending the framework.

Eventually, the DoorDash application became somewhat brittle. New code sometimes caused unexpected side effects. Making a seemingly innocuous change could trigger cascading test failures on code paths that were supposed to be unrelated.

Unit tests were also being built with increasing inattention to speed and best practices, making them too slow to run efficiently in the continuous integration pipeline. Running a subset of tests in the suite to validate a specific functionality was challenging to do with high confidence because the code became so intertwined that a change in one module could cause a regression in a seemingly unrelated area of the codebase.

Fixing bugs, updating business logic, and developing new features at DoorDash now required a significant amount of knowledge about the codebase, even for relatively easy tasks. New engineers had to assimilate a massive amount of information about the monolith before becoming efficient and comfortable with daily activities. Also, because each deployment included an increasing number of changes, every rollback caused by a regression had a larger detrimental effect on the engineering team’s overall velocity. The increased cost of rolling back changes forced the release team to frequently run hotfix deployments for mission-critical patches so we could avoid another rollback.

Furthermore, no significant effort was made to prevent different areas of code from living together in the same modules. Not enough safeguards were put in place to prevent one area of the business logic from calling a different area, and the modules were not always clearly partitioned. The vast majority of the issues we experienced while coding and deploying the software were a direct result of lack of isolation between the distinct domains of the codebase.

The tech stack was also starting to struggle as traffic to our platform increased. Stability issues emerged when new hires who were not accustomed to large Python codebases running a live application began to introduce code. For instance, the dynamically typed nature of the language made it difficult to verify that changes didn’t have unforeseen effects at runtime. While Python did support type hinting at the time, adopting it across the entire codebase would have been complicated because of the scale of the problem.
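As a small illustration of the dynamic typing problem, consider the following sketch. The function names are hypothetical and are not taken from the DoorDash codebase. The untyped version silently accepts a string and returns a wrong result, while the annotated version gives a static checker such as mypy enough information to reject the same call before the code ships. Note that Python does not enforce the hints at runtime.

```python
def total_cents_untyped(unit_price_cents, quantity):
    # Nothing stops a caller from passing a string read from a request payload.
    return unit_price_cents * quantity


def total_cents(unit_price_cents: int, quantity: int) -> int:
    # Same logic, but the annotations let a static type checker flag
    # a call such as total_cents("500", 2) without running the code.
    return unit_price_cents * quantity


# String repetition instead of multiplication: a silent runtime bug.
wrong = total_cents_untyped("500", 2)   # "500500"
right = total_cents(500, 2)             # 1000
```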

Another problem we faced was that the monolith was written without any cooperative multitasking techniques. Adopting these techniques would have been beneficial, as it would have mitigated the effect of I/O-bound tasks on the vertical scalability of the system. However, introducing this pattern in the monolith was not easy given the potentially disruptive and difficult-to-predict impact of the change. As a result, the number of replicas required to satisfy the growing traffic volumes increased significantly in a short period of time and our Kubernetes cluster reached the limits of its capacity. The elevated number of monolith instances would frequently cause our downstream caches and database to reach connection limits, requiring connection poolers such as PgBouncer to be deployed in the middle.
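For readers unfamiliar with the term, the sketch below shows cooperative multitasking using Python’s standard `asyncio` module. It is a generic illustration, not the approach DoorDash took: the `fetch` coroutine and the request names are hypothetical, and `asyncio.sleep` stands in for a network or database call.

```python
import asyncio
import time


async def fetch(name, delay):
    # asyncio.sleep stands in for an I/O-bound call (an assumption for the demo).
    await asyncio.sleep(delay)
    return name


async def handle_requests(delay=0.2):
    # While one coroutine awaits I/O, the event loop runs the others,
    # so the three waits overlap instead of adding up.
    return await asyncio.gather(
        fetch("menu", delay),
        fetch("cart", delay),
        fetch("dasher", delay),
    )


def run_demo(delay=0.2):
    start = time.perf_counter()
    results = asyncio.run(handle_requests(delay))
    elapsed = time.perf_counter() - start
    return results, elapsed
```

Because the waits overlap, a single process can keep many I/O-bound requests in flight at once, which is why this style reduces the number of replicas needed for the same traffic.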

Another problem was related to database load and data migrations. Despite attempts to offload the database by creating separate databases for specific domains, DoorDash still had one single-master instance of PostgreSQL as the source of most of the data. Attempts to scale the instance vertically by adding more capacity and horizontally by adding more read replicas hit some limitations because of the technology used. Tactical mitigations, like reducing queries-per-second, were counterbalanced by the increasing number of daily orders. As the database model grew, coupling became a major concern and migrations of data to separate domain-specific databases became more difficult.

Over the years, different attempts were made to address these issues, but these were isolated initiatives lacking a clear, company-wide vision in terms of scaling the site and the infrastructure. It was clear that we needed to decrease the coupling among domains and that we needed to build a plan to scale the software and team, but the main question was how to proceed.

The leap to microservices

DoorDash built its first microservice, Deep Red, which hosted functionality for logistics artificial intelligence (AI), out of the monolith in 2014. At that stage, there was no plan to restructure the architecture to be fully service-oriented, and this service was written in Scala mainly because Python was not a good fit for CPU-intensive tasks. Going forward, we started building or extracting more services from the monolith. Our goal was to ensure that new services would be more isolated, to decrease outages, and simpler, allowing engineering teams to ramp up development faster. Payment, point-of-sale, and order transmission services, among others, were also products of this initial phase.

However, in 2018 DoorDash began facing major reliability issues, forcing engineers to focus their time on mitigation rather than developing new features. At the end of the year, the company initiated a code freeze that was quickly followed by an engineering-wide initiative to tackle the problem. This operation was aimed at fixing specific reliability issues on different areas of the site, but did not include an analysis of why the architecture was so fragile.

In 2019, Ryan Sokol, the new VP of engineering for DoorDash, started a profound reflection on three areas of our software engineering:

- Architecture: monolithic versus microservice-based

- Tech stack: programming language (Python versus Java Virtual Machine), inter-service communication (REST versus gRPC), and asynchronous processing (RabbitMQ versus Apache Kafka/Cadence)

- Infrastructure: organization of the infrastructure in order to scale the platform and the engineering team

At that time, the architecture used a hybrid approach, with some subsystems being migrated on microservices with no common tech stack and with the monolith still at the center of all the critical flows.

The reasons why DoorDash began evaluating a re-architecture of the software were multifold. First and foremost, we were concerned about the stability of the site. Reliability became the first priority for engineering as we were going through the decision-making process around how to move forward with the re-architecting initiative. We were also concerned about the isolation of our different business lines, such as our signature food delivery marketplace and DoorDash Drive, that were already consolidated, and we were interested in the possibility of building new business lines in isolation by leveraging the current functionality and platforms. At the same time, the company was already building in-depth knowledge of how to operationalize mission-critical microservices in multiple domains, including logistics, order transmission, and the core platform.

There were multiple advantages to having a microservice architecture that we were looking to utilize. On one hand, DoorDash strived for a system where functionalities were built, executed, and operationalized in isolation, and where service failures were not necessarily inducing system failures nor causing outages. On the other hand, the company was looking for flexibility and agility in launching new business lines and new features, while being able to rely on the underlying platforms to achieve reliability, scalability, and isolation.

Furthermore, this architecture allowed for different classes of services, including frontend, BFFs (backends for frontend), and backend, to be built with different technologies. Last but not least, the company was looking for an organizational model where scaling the engineering team with respect to the architecture was feasible. In fact, the microservice architecture allowed for a model where smaller teams could focus on specific domains and on the operations of the corresponding sub-systems with a lower cognitive load.

To reiterate, the reasons why we moved to a microservice-based architecture were:

- Stabilization of the site

- Isolation of the business lines

- Agility of development

- Scaling the engineering platform and organization

- Allowing different tech stacks for different classes of services

Defining these requirements helped us determine a thoughtful and well-planned strategy for a successful transition to a microservice architecture.

Making the transition

Moving out of the monolith was not an easy call for DoorDash. The company decided to start this process during a historical phase where the business was experiencing unprecedented growth in terms of order volume, new customers, and new merchant onboarding, along with the launch of new business lines consolidating on the platform.

We can identify four separate phases of this still-ongoing journey:

- Prehistory: before the strategic launch of microservices

- Project Reach: addressing critical workflows

- Project Metronome: beginning business logic extraction

- Project Phoenix: designing and implementing the holistic microservice-based platform

Prehistory

In this phase, from 2014 until 2019, DoorDash built and extracted services out of the monolith without a specific vision or direction for how these services were supposed to interact or what the common underlying infrastructure and tech stack would be. The services were designed to offload some of the functionality of the monolith to teams that were working on domain-specific business logic. However, these services still depended on the monolith for the execution of some workflows and to access data from the main database. Any failure or degradation of the monolith heavily affected the workflows powered by these “satellite” services. This behavior arose because the satellite services were not designed to work in isolation, mainly because of the lack of a cohesive strategy on the future of the software architecture.

Project Reach

In 2019 the company started the first organized effort to address the problem of re-architecting the codebase, the infrastructure, and the tech stack. Project Reach’s scope required that we address a set of critical functionalities and begin the extraction of the corresponding code into new services. The first efforts were focused on the business-critical workflows and on the code extractions that were already in progress from the previous phase. Another achievement of Project Reach was to begin standardizing the tech stack. In this phase, DoorDash adopted Kotlin as the common language for backend services and used gRPC as the default remote procedure call framework for inter-service communication. There were also some efforts to move out of PostgreSQL in favor of Cassandra in order to address some scalability issues. The project was carried out by a small task force of engineers with the help of representatives across different teams. The main goals were to raise the engineering team’s awareness of why a re-architecture was needed and to start a process of systematic code extraction from the monolith.

Project Metronome

Project Reach laid the technical foundation for the new microservice architecture, consolidated new patterns of extraction, and proved that migrating off the monolith was possible and necessary. Project Reach’s impact was so profound that the engineering team was able to get buy-in from upper management to focus the effort on the re-architecture and extraction work for all of the fourth quarter of 2019. For the whole duration of Project Metronome, a representative from every team was committed to following up closely on the extraction work for that domain. Technical program management was heavily involved in formally tracking the progress against the milestones that were identified at the beginning of the project. During this quarter, the extraction process changed pace and DoorDash was able to make significant progress on the extraction of some of the critical workflows and, in some cases, to complete the analysis of the remaining functionality to be extracted.

Project Phoenix

Thanks to the momentum generated by Project Metronome on the extraction effort and the in-depth knowledge accumulated over the course of 2019 on the functionality to be extracted from the monolith, we began a rigorous planning phase that served the two-fold purpose of identifying all the workflows still orchestrated by the monolith and determining the final structure of the microservice mesh. This planning phase also aimed to define all the upstream and downstream dependencies for each service and each workflow so that the teams were able to follow up closely with all the stakeholders during the extraction and rollout processes. Data migrations from the main database to the domain-specific databases were also included in the planning as part of the requirements to retire the legacy architecture.

Each effort required to complete the extraction process was formalized into milestones, and each milestone was assigned to one of three tiers in order to prioritize the execution. After this planning phase, a significant portion of the engineering team was dedicated to completing the extraction work, starting with the most critical workflows for the business.

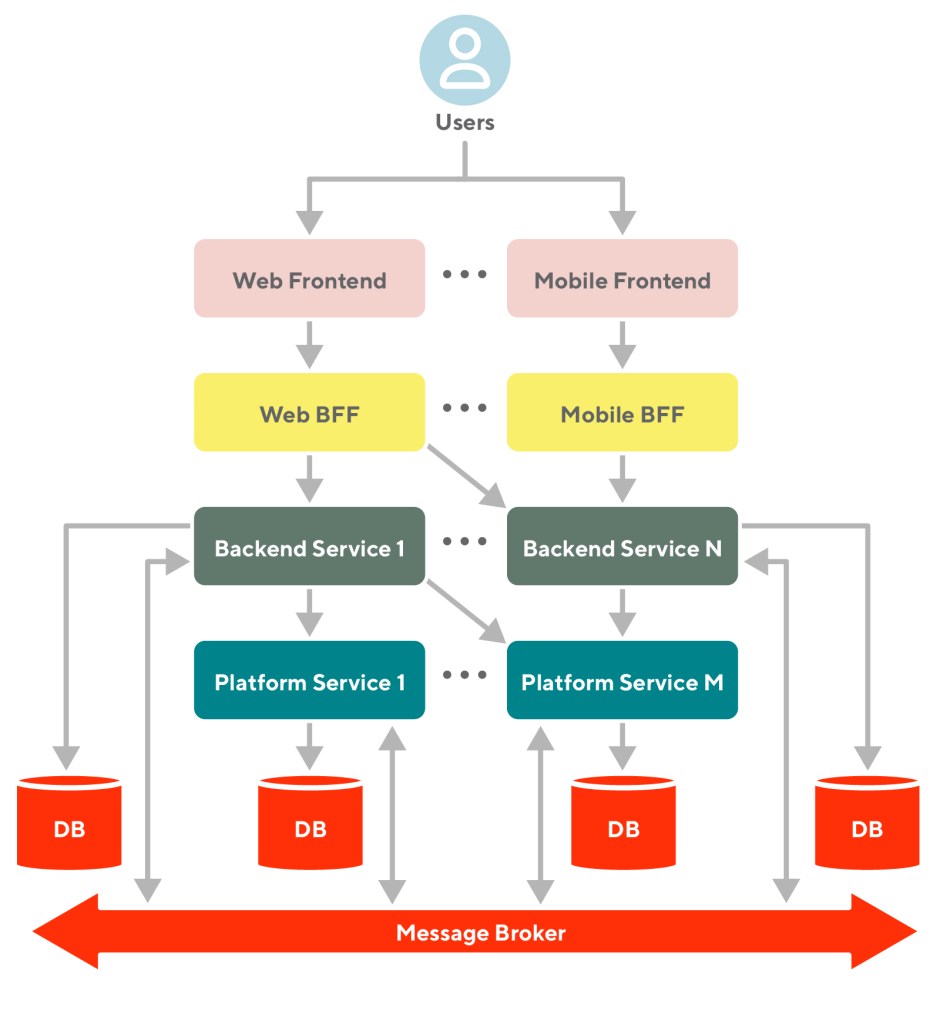

After these phases, a multi-layered microservice architecture emerged:

- Frontend layer: Provides frontend systems (like the DoorDash mobile app, Dasher web app, etc) for the interaction with consumers, merchants, and Dashers that are built on top of different frontend platforms.

- BFF layer: The frontend layer is decoupled from the backend layer via BFFs. The BFF layer provides functionality to the frontend by orchestrating the interaction with multiple backend services while hiding the underlying backend architecture.

- Backend layer: Provides the core functionality that powers the business logic (order cart service, feed service, delivery service, etc).

- Platform layer: Provides common functionality that is leveraged by other backend services (identity service, communication service, etc).

- Infrastructure layer: Provides the infrastructural components that are required to build the site (databases, message brokers, etc) and lays the foundation to abstract the system from the underlying environment (cloud service provider).
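The role of the BFF layer described above can be sketched in a few lines of Python. This is a hypothetical illustration, not DoorDash’s implementation: `get_cart`, `get_delivery_estimate`, `CheckoutView`, and `checkout_bff` are invented names, and the two service functions return canned data in place of real network calls (for example over gRPC).

```python
from dataclasses import dataclass


# Hypothetical stand-ins for backend service clients; in a real system
# these would be network calls to separate services.
def get_cart(consumer_id):
    return {"items": ["burrito", "chips"], "subtotal_cents": 1450}


def get_delivery_estimate(consumer_id):
    return {"eta_minutes": 25}


@dataclass
class CheckoutView:
    """Shape returned to the frontend; hides which services were called."""
    items: list
    subtotal_cents: int
    eta_minutes: int


def checkout_bff(consumer_id, cart_client=get_cart,
                 delivery_client=get_delivery_estimate):
    # The BFF orchestrates several backend calls and returns one response
    # tailored to the screen the frontend needs to render.
    cart = cart_client(consumer_id)
    estimate = delivery_client(consumer_id)
    return CheckoutView(
        items=cart["items"],
        subtotal_cents=cart["subtotal_cents"],
        eta_minutes=estimate["eta_minutes"],
    )
```

Because the frontend only sees `CheckoutView`, the backend services behind it can be split, merged, or rewritten without changing the client.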

The main challenges of re-architecting our platform

DoorDash faced multiple challenges during this re-architecture process. The first challenge was to get the whole engineering team aligned on the strategy of moving to microservices. This alignment was not an easy task, as years of work on the monolith contributed to a lot of inertia in the engineering team with respect to the extraction effort. This cognitive bias, known as the IKEA effect, was part of the reason why DoorDash needed new blood to execute this endeavor. The company proceeded with a disagree-and-commit approach, where a phase of debates on different topics was followed by a commitment from the engineering team as a whole to the overall strategy that had been decided upon.

Having all hands engaged on the re-architecture effort required an evangelization of new reliability patterns that was unprecedented for the company. A big effort was put in place to promote reliability as the foundational property of the new system that the team was about to build. In addition to focusing on reliability, our team also had to emphasize isolation, which had not been a factor when working on the monolith. With respect to this matter, the core issue was to contain the use of anti-patterns while looking for a reasonable compromise between agility of the extraction and technical debt.

The main challenge that the engineering team faced was to define new interaction surfaces among the newly formed services and proceed with extracting the functionality, as opposed to just extracting the code. This task was particularly hard because the team had to do it while moving to a different tech stack and juggling new feature development tasks. In fact, it was not possible to operate in a code-freeze state for the whole duration of the extraction, as new functionalities were still necessary for the business to grow and adapt to the changing needs of customers, merchants, and Dashers.

Also, as code extraction was carried out at different paces across domains, service adoptions were difficult to handle. In fact, the first code extraction efforts didn’t have all the downstream dependencies available to completely migrate to a microservice-based architecture. Therefore, the teams had to put in place temporary solutions, such as resorting to direct database access, while waiting for the APIs to land on the corresponding services. Even if this anti-pattern allowed for progress on the extraction, table migrations became more difficult to achieve as we didn’t have a clear and well-defined access pattern to the data. Temporary APIs were used to mitigate this issue, but that approach was increasing the overall technical debt. Therefore, it was critical to make sure that adoptions were constantly monitored and carried out as new extractions were successfully implemented.

Lastly, one of the most complex efforts that was put in place was the data migration from the main database to the newly formed service-specific databases without disrupting the business. These migrations were as important as they were complex and potentially disruptive, and building the foundation for the first migrations required multiple iterations.

It is worth noting that all these efforts were unfolding while DoorDash was experiencing a significant order volume growth due to the effects of Covid-19 on the food delivery market. While we were performing the code extractions and database migrations we had to make sure that the current architecture was able to handle the increasing load. Juggling all these elements at the same time was not an easy task.

Hitting our goals

In the 16 months since Project Reach’s inception, DoorDash has made huge progress toward a full-fledged microservice architecture and toward retiring the monolith and the infrastructure wired to it.

We are working to move all of our business-critical workflows into microservices and plan to freeze the monolith codebase, with few exceptions for high priority fixes. New product features are currently being developed on the new microservices. The infrastructure team is focused on powering this new architecture and on making it flexible enough to support any new business lines which might launch in the future.

Also, most of the critical tables were successfully extracted from the main database into microservice-specific databases, thus eliminating a major bottleneck from the architecture. Relative to the organic increase in requests driven by business growth, the load on the main database has decreased.

With the new architecture, DoorDash is powering roughly 50 microservices, among which 20 are on the critical path, meaning their failure may cause an outage. By adopting a common set of infrastructural components and a common tech stack, and by loosely coupling most of the interactions among services, the company achieved a level of reliability and isolation that pushed the uptime indicators to levels that were not achievable with the monolith. The organization is converging to a structure where small teams of domain experts have acquired the knowledge to scale, operate, and extend each component. While these results have been outstanding, there are still several challenges ahead. In the upcoming articles in this series, we will discuss some of the challenges we faced implementing microservices, including dealing with rigid data contracts, the hurdles of adding new services to the platform and operating them, and dealing with failures across service boundaries.