Overview

- Introduction

- What is the assignment problem at DoorDash?

- What is reinforcement learning?

- Reinforcement learned assignment

- Moving forward

- Conclusion

Introduction

DoorDash recently held our thirteenth hackathon. Hackathons are our opportunity to explore new technologies and moon-shot ideas; they help us stay up-to-date with the world and think 10x. At DoorDash, we’re constantly thinking of ways to improve the customer experience, from reducing delivery times to increasing Dasher efficiency. Algorithms and artificial intelligence help lay the foundation for delightful customer experiences, and for this hackathon, we wanted to see if more advanced techniques could further improve our product. Our team of six applied an artificial intelligence technique called reinforcement learning to the assignment problem at DoorDash, beating our production algorithm in a simulation environment with both quicker delivery speeds and higher Dasher efficiencies. In this post, we will describe what that means and how we accomplished it.

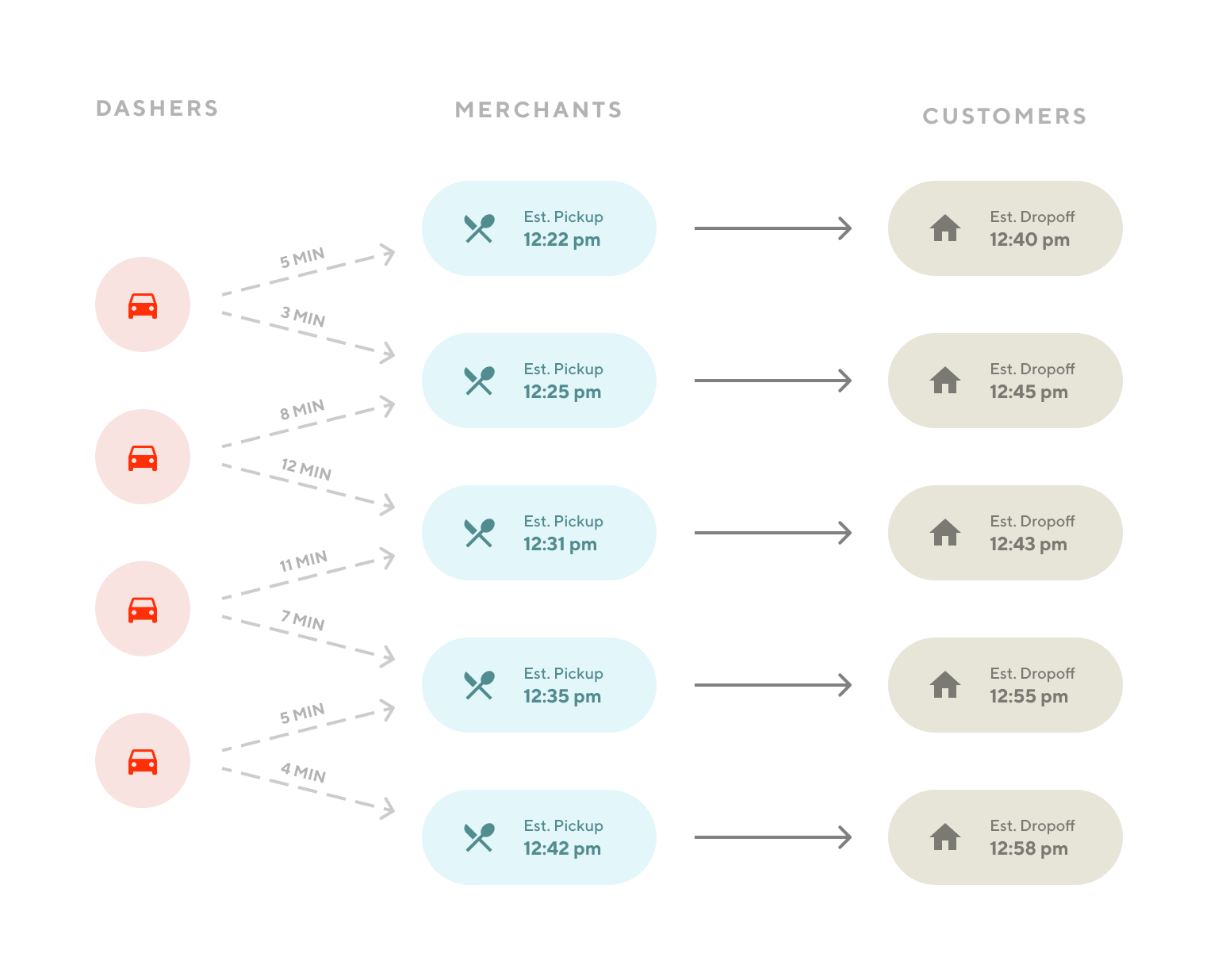

What is the assignment problem at DoorDash?

DoorDash provides a real-time platform for fulfilling consumer demand for merchant goods via a flexible Dasher fleet. Within DoorDash, the logistics team focuses on the magic that happens behind the scenes from when a consumer submits an order to when they receive that order at their door, including everything from supply/demand forecasting to automated delivery monitoring. The assignment problem is one specific area within logistics that deals with the following question: which Dasher should fulfill which delivery? We aim to make Dasher-delivery assignments that yield quick delivery speeds and healthy Dasher efficiency, where efficiency is number of deliveries performed by Dasher per unit time. In making these decisions, we need to consider many factors, including but not limited to:

- Consumer quoted times

- Estimated order ready times

- Travel estimations

- Routing (for multi-delivery assignments)

- Dasher utilization (percentage of time a Dasher is actively working on a delivery over an entire shift duration)

The assignment algorithm at DoorDash has been crafted over the years to consider all these factors and more, in order to best serve consumers, merchants, and dashers. However, given the breadth of the assignment problem, and the fact that we don’t have ground truths for what the best assignments are, improvements to the algorithm do not always come easily. When Hackathon XIII rolled around, we wondered, “Could an artificially intelligent assignment algorithm learn to improve itself?”.

What is reinforcement learning?

Reinforcement learning is one of the most popular and powerful artificial intelligence algorithms today. Instances of reinforcement learning have reached mainstream news, such as AlphaGo, the reinforcement learned computer program that defeated the world’s top Go player. In short, the goal of reinforcement learning is to learn the best action given a state of the environment, in order to maximize the overall rewards. Here are the fundamental concepts in reinforcement learning, summarized in Figure 2.

State: The current status of the environment. It represents all the information needed to choose an action.

Action: The set of all possible moves at a state.

Reward: The feedback as a result of the action. Note that rewards can be immediate or delayed.

Policy: The strategy used to choose an action at each state.

Agent: The entity that takes actions and tries to learn the best policy.

Environment: The world that the agent interacts with.

Here’s an example application to illustrate these concepts. Let’s say we are designing a delivery robot and teaching it to pick up delivery items while avoiding pedestrians. In this case the robot is an agent trying to learn the optimal policy, i.e. optimal action in each state. The states can be the area that robot is operating in, with the items and pedestrians at various locations, and the actions can be move left, right, forward, or backward. The robot receives a positive reward when it picks up an item, a negative reward when it runs into a pedestrian, and no reward otherwise. With these settings the robot can learn the optimal policy to pick up all the items in a way that maximizes the reward.

The goal of reinforcement learning is to find the optimal policy. This is not trivial since unlike Markov Decision Processes, the rewards and transition probabilities between states are unknown, as seen in Figure 3. For this reason, there are many techniques to either obtain this information (model-based) or obtain the optimal policy directly (model-free), such as Monte Carlo, Bootstrap, and SARSA. The most commonly used is Q-learning, which is a model-free, off-policy method that can directly give us an estimate of the Q function to find the optimal policy.

It is worth mentioning that to use Q-learning, we need to collect data by following an exploration policy. When we are more concerned with learning about the world than maximizing utility, we can choose a no-exploitation, all-exploration policy, which explores the action space by always choosing a random action. When we care more about utility, we need to balance exploration and exploitation, so that we can reap the benefits of what we’ve learned while still not being blind to opportunities that we haven’t yet encountered. One common approach in the second case is the epsilon-greedy algorithm, which explores with probability epsilon and exploits with probability 1-epsilon.

In practice, when there are a large number of states, the state space is featurized and function approximation is used to determine the Q function. This makes the Q function a model instead of a lookup table. Oftentimes, handcrafting features is difficult, so deep learning models like fully connected or convolutional neural networks are used to represent the Q function. This approach is known as Deep Q-Network (DQN) and is very useful when feature dimensionality is high and data volume is also high.

Reinforcement learned assignment

Now we will discuss how we applied reinforcement learning to the DoorDash assignment problem. To formulate the assignment problem in a way that’s suitable for reinforcement learning, we made the following definitions.

State: The outstanding deliveries and working Dashers, since they represent the current status of the world from an assignment perspective. Note that this means that the state space is practically infinite, since deliveries and Dashers individually can have many different characteristics (pick up location/time, drop off location/time, etc.) and there can be many different combinations of deliveries and Dashers.

Action: The most natural choice is the assignment of Dashers to deliveries. However, even with just 15 deliveries and 15 Dashers, the total number of combinations exceeds one trillion! Therefore, to significantly reduce the action space, we defined the actions to be different variants of the assignment algorithm with different parameters. This can be thought of as intelligent parameter tuning where the model learns which parameters are best for a given state of deliveries and Dashers.

Reward: Combination of delivery speeds and Dasher efficiency. We want deliveries to reach customers as quickly as possible while utilizing Dashers as efficiently as possible. This translates to maximizing delivery speeds and maximizing Dasher efficiency. The reward also includes a penalty for undelivered deliveries, to ensure that all the deliveries are actually assigned to Dashers and delivered to consumers.

With these concepts defined to fit reinforcement learning, we now needed to implement the two key components to actually perform reinforcement learning, the environment and the agent.

Environment: We need a system that can output states (deliveries and Dashers) and rewards (delivery speeds and Dasher efficiencies), as well as take in actions (variants of assignment algorithm) and subsequently update the states and rewards. Our production assignment system fits the bill, but we run the risk of hurting our actual deliveries and customers, since the agent might choose bad actions as it learns via an exploration policy. Fortunately, we already have a simulator for our assignment system that can take in an assignment algorithm and produce simulated assignments that mirror our production system. This assignment simulator is used for obtaining offline results before trying online experiments for the assignment system. Therefore, we used this simulator as our environment, allowing us to train our reinforcement learning model on states/actions/rewards that are accurate to our production system without impacting our customers.

Agent: We chose a deep neural network as the agent, since it makes sense to use a model for the Q function and featurize the states into high dimensional vectors, given our infinite state space. More details about this featurization and model will be covered in the next section.

A high level summary of our problem formulation can be found in Figure 4. In summary, the assignment simulator outputs the state, which the agent uses to choose a variant of the assignment algorithm. The assignment simulator runs the chosen algorithm and outputs the new state and the reward, which are both passed back to the agent. This cycle repeats at a preset time interval.

For the actual implementation, we wrapped the DoorDash assignment simulator into an OpenAI Gym environment and used Keras RL for the agent and training, as the two libraries are compatible out-of-the-box.

Deep agent

As previously mentioned, we used a deep neural network as our agent. Recall that the agent maps state and action pairs to rewards. Concretely, the network takes as input the state (represented as different features) and predicts the Q-value for each action, as seen in Figure 5.

For our problem, the features generated from the states are intended to capture the information about deliveries and Dashers that are useful for predicting the Q-value, which are future delivery speeds and Dasher efficiencies. Some examples of these features are the pick-up to drop-off distances, the ratio of deliveries to Dashers, and the estimated order ready times.

The model itself is a multi layer dense/fully-connected network. A few different model parameters were tried, but more thorough hyperparameter tuning and architecture exploration will be done in future work.

This model is trained using the deep Q-learning algorithm as presented by Mnih et al. in their Deep Reinforcement Learning paper. Details can be found in the paper, but the general idea is that the environment and agent are used to generate states, actions, and rewards that are stored as experiences. These experiences are then drawn from to create training samples, which the model uses to optimize towards the maximum Q-value.

Results

Evaluation was done by making assignments for one day of data in one region. We first obtained baseline results by running the assignment simulator with the default production assignment algorithm and obtaining the average delivery speeds and Dasher efficiencies.

Then to evaluate if our model did any better, we had our model pick the best assignment algorithm to use for each state over that same day and obtained the new average delivery speeds and Dasher efficiencies. These results show that the reinforcement learned model achieved on average a 6 second improvement in delivery speed and 1.5 second improvement in Dasher efficiency across all deliveries. This does not seem like much, but when millions of deliveries are involved, these seconds quickly add up. These results prove that reinforcement learning can help with the assignment problem.

Moving forward

We made some progress during the hackathon, but there is always more to do. Here are some ways we would like to improve the agent:

- More hyperparameter tuning, e.g. learning rate, hidden layer sizes.

- Adding more features, i.e. generating more features from the states.

- Structuring features in the form of a 3D grid by placing deliveries/Dashers in the grid based on latitude and longitude. The analogy is an image, which has a height, width, and depth. We can then try convolutional neural networks, which are popular for image based tasks, as the agent.

Conclusion

We have seen how applying reinforcement learning to the assignment problem at DoorDash has yielded an enhanced assignment algorithm. We believe reinforcement learning is a powerful tool that we can use to improve our on-demand logistics platform, and we are excited at the opportunity to further delight our customers using advanced artificial intelligence.

We would love to hear about your production applications of reinforcement learning. If solving these types of problems excites you, come join the team!

Acknowledgments

Thank you to the entire hackathon team for working on this project: Anne Zhou, Gary Ren, Ivy Wang, Richard Hwang, Sifeng Lin, Yixin Tang. And thank you hackathon committee for organizing another fun hackathon!