DoorDash engineering describes its three-step process for safely migrating business logic as APIs.

Category Archives: engineering

Building a Gigascale ML Feature Store with Redis, Binary Serialization, String Hashing, and Compression

When a company with millions of consumers, such as DoorDash, builds machine learning (ML) models, the amount of feature data can grow to billions of records, with millions actively retrieved during model inference under low-latency constraints.

Uncovering Online Delivery Menu Best Practices with Machine Learning

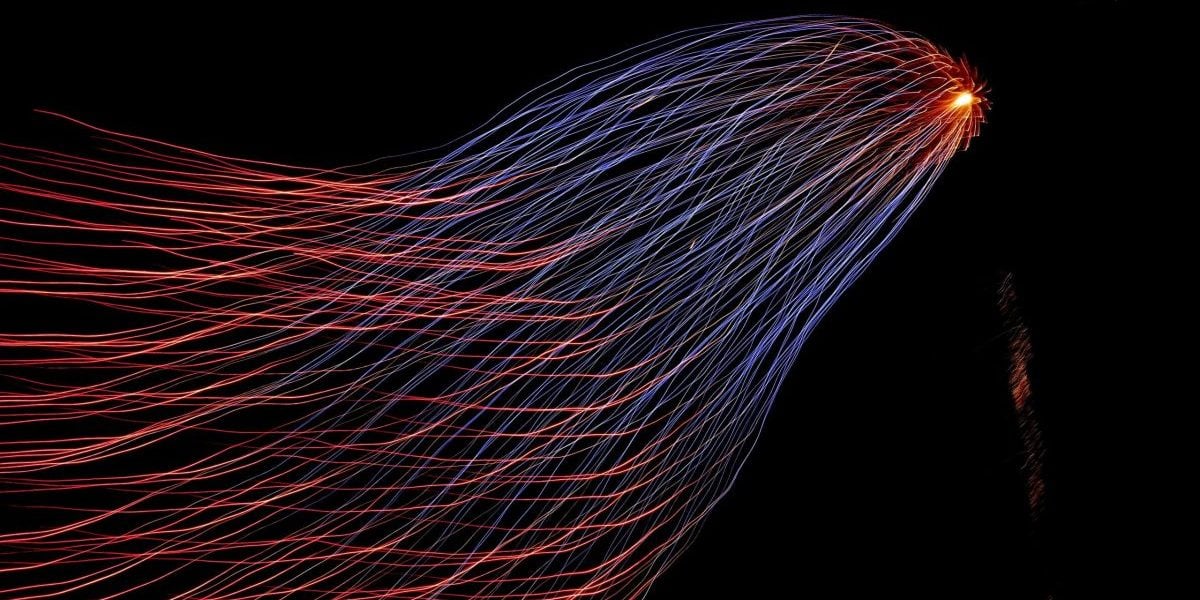

Photo Credit: Jeff Marini

Restaurants on busy thoroughfares can use many elements to catch a customer’s eye, but online ordering experiences mostly rely on the menu to generate sales.

Hello Seattle: DoorDash Expands its Engineering Footprint to the Pacific Northwest

The newest DoorDash engineering office is seeking engineering talent to support its Drive and DashMart business lines.

Hot Swapping Production Tables for Safe Database Backfills

Making changes to data tables can disrupt production systems that must operate 24/7. DoorDash engineering explains how to hot swap tables without interfering with production systems.

Building an Image Upload Endpoint in a gRPC and Kotlin Stack

When moving to a Kotlin gRPC framework for backend services, handling image data can be challenging.

Solving for Unobserved Data in a Regression Model Using a Simple Data Adjustment

Making accurate predictions when historical information isn’t fully observable is a central problem in delivery logistics.

Using Display Modules to Enable Rapid Experimentation on DoorDash’s Homepage

Online companies need flexible platforms to quickly test different product features and experiences.

Improving Online Experiment Capacity by 4X with Parallelization and Increased Sensitivity

Data-driven companies measure real customer reactions to determine the efficacy of new product features, but the inability to run these experiments simultaneously and on mutually exclusive groups significantly slows down development.

Integrating a Search Ranking Model into a Prediction Service

As companies use data to improve their user experiences and operations, it becomes increasingly important that the infrastructure supporting the creation and maintenance of machine learning models is scalable and enables high productivity.